Summary

Bank of England survey finds gradient boosting is the most widely used AI model type in UK financial services

Boosting balances accuracy with the interpretability that financial markets need

Concrete applications across bond trading, payments, HFT and custom platforms

Real case studies show measurable performance improvements in production

Elegant established solutions often outperform flashier complex alternatives

Why do so many professional investors and trading desks still favour decision‑tree‑based algorithms in an era of transformer models and generative AI? It might surprise you to learn that some of the most effective machine‑learning tools on Wall Street were invented more than two decades ago. Recent surveys and case studies reveal that gradient‑boosting models—often shortened to boosting—are the most popular AI model type in UK financial services and have become integral to a wide range of capital‑markets applications.

The quiet popularity of boosting

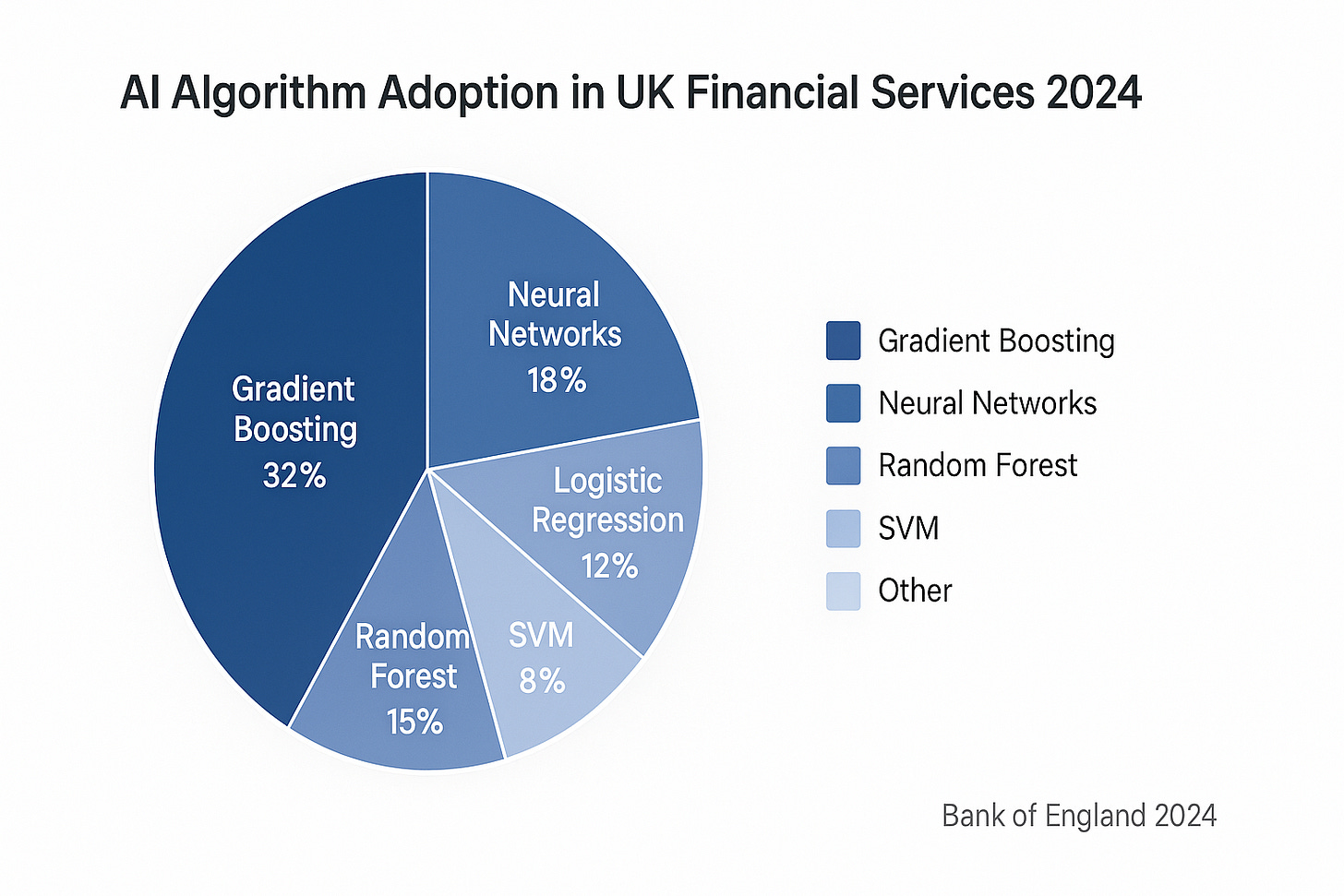

The Bank of England’s 2024 survey of AI use in financial services found that gradient‑boosting models account for around 32 % of all AI applications reported by regulated firms, far more than any other model type[1]. That figure highlights how widely boosting is relied upon across trading, risk management and analytics. Yet for many outside the data‑science community, boosting remains opaque: you might see it referenced in product brochures as “XGBoost,” “LightGBM” or “CatBoost,” but what does it do and why is it so prevalent?

In simple terms, boosting is an ensemble technique: a way of combining many weak, easily interpretable models into a single strong predictor. Think of it like assembling a panel of junior analysts to forecast bond prices. The first analyst reviews the data and makes a prediction that will almost certainly contain mistakes. The second analyst is asked to concentrate on where the first fell short, the third on what both predecessors still missed, and so on. Adding up their successive corrections yields a consensus that is often more accurate and robust than any single analyst could produce.

That is essentially how gradient‑boosting algorithms work: they train small decision trees sequentially, each one fitted to the errors (residuals) that the ensemble built so far still makes, and then sum the trees' scaled outputs to generate a prediction. The result is a model that performs well on messy, structured (tabular) datasets and offers a degree of transparency through feature importance scores, a crucial benefit in a regulated industry.
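The sequential, error‑correcting loop described above can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions: squared‑error loss, depth‑1 trees ("stumps") and a fixed learning rate. Production libraries such as XGBoost, LightGBM and CatBoost add deeper trees, regularisation and much faster split search. Every name below is illustrative, not any library's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stump:
    """A depth-1 regression tree: one threshold split on one feature."""
    feature: int
    threshold: float
    left_value: float   # output when x[feature] <= threshold
    right_value: float  # output when x[feature] > threshold

    def predict(self, x: list[float]) -> float:
        return self.left_value if x[self.feature] <= self.threshold else self.right_value


def fit_stump(X: list[list[float]], residuals: list[float]) -> Stump:
    """Pick the split whose two leaf means best fit the residuals (least squares)."""
    mean = sum(residuals) / len(residuals)
    best = Stump(0, float("inf"), mean, mean)  # fallback: a constant prediction
    best_sse = sum((r - mean) ** 2 for r in residuals)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[f] <= t]
            right = [r for row, r in zip(X, residuals) if row[f] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if sse < best_sse:
                best, best_sse = Stump(f, t, lm, rm), sse
    return best


@dataclass
class BoostedStumps:
    base: float           # starting prediction: the mean of the targets
    learning_rate: float  # shrinkage applied to every tree's output
    stumps: list[Stump]

    def predict(self, x: list[float]) -> float:
        # The ensemble's prediction is a scaled sum of tree outputs, not a vote.
        return self.base + self.learning_rate * sum(s.predict(x) for s in self.stumps)


def fit_boosted_stumps(X: list[list[float]], y: list[float],
                       n_rounds: int = 100, learning_rate: float = 0.1) -> BoostedStumps:
    model = BoostedStumps(sum(y) / len(y), learning_rate, [])
    preds = [model.base] * len(y)
    for _ in range(n_rounds):
        # For squared loss, the negative gradient is simply the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(X, residuals)
        model.stumps.append(stump)
        # Each new stump nudges the ensemble towards what it still gets wrong.
        preds = [p + learning_rate * stump.predict(x) for p, x in zip(preds, X)]
    return model


def mse(model: BoostedStumps, X: list[list[float]], y: list[float]) -> float:
    return sum((model.predict(x) - t) ** 2 for x, t in zip(X, y)) / len(y)


# Toy usage: two features, target = x0 + 3 * x1.
X = [[float(i), float(i % 2)] for i in range(10)]
y = [x0 + 3.0 * x1 for x0, x1 in X]
for rounds in (10, 200):
    print(rounds, "rounds -> training MSE", round(mse(fit_boosted_stumps(X, y, rounds), X, y), 3))
```

Because each stump is a least‑squares fit to the current residuals, every round lowers the training error; the learning rate keeps any single tree from dominating, which is one of the levers used against overfitting.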

From trading desks to payment systems: concrete examples

Several recent case studies illustrate how boosting is being applied across the capital‑markets ecosystem.

Price discovery in bond trading. Fixed‑income analytics provider Overbond developed an AI‑adjusted price‑aggression tool for buy‑side traders. The model analyses dealer axes and trade history using gradient‑boosting algorithms to rate dealers’ quotes as aggressive, neutral or passive[2]. Instead of relying on anecdotal relationships, portfolio managers receive objective signals on which counterparties are quoting most competitively—helping them source liquidity in fragmented markets.

Anomaly detection in high‑value payment systems. A Bank of Canada working paper describes a two‑stage framework for flagging unusual transactions in the Lynx real‑time gross‑settlement system. The first stage uses a LightGBM classifier to label payments as likely morning or afternoon transactions; this gradient‑boosting model out‑performed logistic regression by up to 44 % and correctly detected 92.2 % of artificially manipulated transactions[3]. By filtering the flood of payments down to a manageable set for human review, boosting helps protect a critical piece of financial infrastructure.
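A hypothetical sketch, not the working paper's method, of how a time‑of‑day classifier can act as a filter: assume some classifier, such as a LightGBM model, has already estimated the probability that each payment settles in the afternoon, and flag payments whose observed session the model considers very unlikely. The `Payment` fields, the 0.05 threshold and the toy payments are illustrative assumptions. The `detection_rate` helper shows what a statistic such as "detected 92.2 % of artificially manipulated transactions" measures: manipulated payments that were flagged, divided by all manipulated payments (the recall).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Payment:
    payment_id: str
    settled_in_afternoon: bool  # observed settlement session
    p_afternoon: float          # classifier's predicted probability (assumed given)
    manipulated: bool = False   # ground truth, known only in tests with injected anomalies


def flag_anomalies(payments: list[Payment], threshold: float = 0.05) -> list[Payment]:
    """Flag payments whose observed session the classifier considers very unlikely."""
    flagged = []
    for p in payments:
        # Probability the model assigned to what actually happened.
        p_observed = p.p_afternoon if p.settled_in_afternoon else 1.0 - p.p_afternoon
        if p_observed < threshold:
            flagged.append(p)
    return flagged


def detection_rate(payments: list[Payment], flagged: list[Payment]) -> float:
    """Share of known manipulated payments that were flagged (i.e. recall)."""
    manipulated = [p for p in payments if p.manipulated]
    if not manipulated:
        return 0.0
    flagged_ids = {p.payment_id for p in flagged}
    return sum(p.payment_id in flagged_ids for p in manipulated) / len(manipulated)


payments = [
    Payment("a", settled_in_afternoon=False, p_afternoon=0.10),
    Payment("b", settled_in_afternoon=True, p_afternoon=0.97),
    Payment("c", settled_in_afternoon=True, p_afternoon=0.02, manipulated=True),
    Payment("d", settled_in_afternoon=False, p_afternoon=0.99, manipulated=True),
    Payment("e", settled_in_afternoon=False, p_afternoon=0.60, manipulated=True),
]
flagged = flag_anomalies(payments)
print([p.payment_id for p in flagged])              # ['c', 'd']
print(round(detection_rate(payments, flagged), 3))  # 0.667
```

Lowering the threshold shrinks the human review queue but lets more manipulated payments slip through, so in a sketch like this the threshold is the main dial between workload and detection rate.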

Liquidity and yield‑curve forecasting. In its February 2025 report on fixed‑income markets, RoZetta Technology notes that ensemble methods such as XGBoost provide accurate predictions of bond‑market liquidity, while gradient‑boosting techniques improve yield‑curve estimation[4]. These models ingest macroeconomic indicators, trade volumes and credit spreads to generate forward curves that feed into pricing, risk management and portfolio construction.

High‑speed electronic trading. Trading firms operating at sub‑millisecond speeds need machine‑learning models that can generate signals almost instantaneously. Xelera Technologies’ research shows that XGBoost, LightGBM and CatBoost, all gradient‑boosting frameworks, can deliver microsecond‑level inference latencies when deployed on FPGA‑based accelerators[5]. This makes them attractive alternatives to deep neural networks, which often have longer inference times. A follow‑up benchmark found that offloading LightGBM inference to an accelerator reduced latency to around 1.13 µs, more than 10× faster than CPU‑based solutions[6]. For market‑makers and quantitative hedge funds, such reductions can be the difference between profitable and unprofitable trades.
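Part of the reason boosted trees suit low‑latency hardware is that inference involves no matrix arithmetic. Each tree is walked with a short, bounded sequence of comparisons and array look‑ups, and the trees are independent, so their outputs can be computed in parallel and summed. The sketch below shows a generic flattened‑array tree layout of the kind commonly used for fast inference. It is an illustration under that assumption, not Xelera's implementation, and the trees and feature meanings are invented.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FlatTree:
    """A binary decision tree stored as parallel arrays.

    Internal node i compares x[feature[i]] with threshold[i] and moves to
    left[i] or right[i]; a node with left[i] == -1 is a leaf returning value[i].
    """
    feature: list[int]
    threshold: list[float]
    left: list[int]
    right: list[int]
    value: list[float]

    def predict(self, x: list[float]) -> float:
        node = 0
        while self.left[node] != -1:  # loop runs at most `depth` times
            if x[self.feature[node]] <= self.threshold[node]:
                node = self.left[node]
            else:
                node = self.right[node]
        return self.value[node]


def predict_ensemble(trees: list[FlatTree], x: list[float], base: float = 0.0) -> float:
    # The trees do not depend on each other, so hardware can evaluate them in
    # parallel; only the final sum combines their outputs.
    return base + sum(t.predict(x) for t in trees)


# Hypothetical model: x[0] = order-book imbalance, x[1] = spread in ticks.
tree_1 = FlatTree(
    feature=[0, 1, 0, 0, 0],
    threshold=[0.0, 1.0, 0.0, 0.0, 0.0],
    left=[1, 3, -1, -1, -1],
    right=[2, 4, -1, -1, -1],
    value=[0.0, 0.0, 0.5, -0.3, -0.1],
)
tree_2 = FlatTree(
    feature=[1, 0, 0],
    threshold=[2.0, 0.0, 0.0],
    left=[1, -1, -1],
    right=[2, -1, -1],
    value=[0.0, 0.2, -0.2],
)
signal = predict_ensemble([tree_1, tree_2], [0.4, 1.5])
```

Since tree depth bounds the loop, worst‑case latency is predictable, a property that matters more on a trading path than average‑case speed.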

Custom trading platforms. Innowise, a software developer, built a bespoke trading platform for an Irish proprietary trading firm. The system uses CatBoost and XGBoost models to produce trading signals and monitor multiple exchanges in real time. According to the case study, the ML‑enabled platform cut information‑processing delays from 2–3 s to 34 ms (reported as a 97 % improvement) and improved the firm’s responsiveness to market opportunities[7].
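Taking the case study's endpoints at face value, the size of the reduction can be checked directly. The arithmetic below gives roughly 98–99 % for the 2–3 s range, so the quoted 97 % figure is, if anything, conservative.

```python
def percent_reduction(before_ms: float, after_ms: float) -> float:
    """Percentage fall in latency when going from before_ms to after_ms."""
    return 100.0 * (before_ms - after_ms) / before_ms


# Endpoints of the 2–3 s range reported in the case study, against 34 ms.
for before_ms in (2000.0, 3000.0):
    print(f"{before_ms / 1000:.0f} s -> 34 ms: {percent_reduction(before_ms, 34.0):.1f}% lower")
# 2 s -> 34 ms: 98.3% lower
# 3 s -> 34 ms: 98.9% lower
```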

Why boosting resonates in capital markets

There are good reasons why boosting is so widely adopted in finance despite the excitement around more complex AI models:

It balances accuracy and transparency. Decision‑tree ensembles provide built‑in feature importance measures that quantify which variables (e.g., term structure, liquidity score, credit spread) drive predictions, whereas deep neural networks typically need separate post‑hoc attribution tools. Regulators and risk managers often require this interpretability to validate models and explain decisions.

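It handles messy financial data. Capital‑markets datasets are full of missing values, outliers and non‑linear relationships. Because trees split on thresholds, gradient‑boosting models need no feature scaling, are relatively insensitive to extreme feature values and capture non‑linear interactions automatically; libraries such as XGBoost and LightGBM also handle missing values natively. As a result, they need less data preprocessing than many alternatives.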

It is computationally efficient. Boosting models train relatively quickly and, as the Xelera benchmarks show, can run at microsecond‑level inference latencies when properly optimised[5][6]. This efficiency matters in high‑frequency trading and real‑time risk monitoring.

It lends itself to hybrid approaches. Many firms pair gradient‑boosting models with domain‑specific features or combine them with neural networks in hybrid ensembles. For example, a yield‑curve forecasting system might use boosting to model short‑term dynamics while a long short‑term memory (LSTM) network handles longer‑term trends.
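To illustrate the transparency point in the list above, gain‑based importance credits each feature with the total loss reduction achieved by the splits that use it; XGBoost and LightGBM can both report a measure of this kind. The sketch aggregates it by hand from a list of already‑fitted splits. The feature names echo the examples in the text, and the gain values are invented for illustration.

```python
from __future__ import annotations

from collections import defaultdict

# (feature used by a split, training-loss reduction from that split).
# Hypothetical values collected from an already-fitted ensemble.
SPLITS = [
    ("credit_spread", 12.0),
    ("liquidity_score", 5.0),
    ("credit_spread", 8.0),
    ("term_structure", 3.0),
    ("liquidity_score", 2.0),
]


def gain_importance(splits: list[tuple[str, float]]) -> dict[str, float]:
    """Total gain per feature, normalised to sum to 1 and sorted largest first."""
    totals: dict[str, float] = defaultdict(float)
    for feature, gain in splits:
        totals[feature] += gain
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    return {feature: gain / grand_total for feature, gain in ranked}


for name, share in gain_importance(SPLITS).items():
    print(f"{name:<16}{share:.0%}")
# credit_spread   67%
# liquidity_score 23%
# term_structure  10%
```

Note that such scores describe what the fitted model relies on, not causal drivers of bond prices; validation teams usually read them alongside other checks.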
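To illustrate the messy‑data point in the list above, XGBoost's sparsity‑aware split finding learns a default direction for missing values at each split: it evaluates the split with all missing rows sent left and again with them sent right, and keeps the cheaper option. The sketch below reproduces that idea for one feature and one candidate threshold under squared‑error loss. It is a simplified stand‑in for the library's gradient‑based scoring, and the data are invented.

```python
from __future__ import annotations

import math


def sse(values: list[float]) -> float:
    """Sum of squared deviations from the mean (0.0 for an empty group)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def split_with_default(xs: list[float], ys: list[float], threshold: float) -> tuple[str, float]:
    """Evaluate one split, routing missing (NaN) feature values to the cheaper side.

    Returns the learned default direction for missing values and the split's loss.
    """
    left = [y for x, y in zip(xs, ys) if not math.isnan(x) and x <= threshold]
    right = [y for x, y in zip(xs, ys) if not math.isnan(x) and x > threshold]
    missing = [y for x, y in zip(xs, ys) if math.isnan(x)]
    loss_if_left = sse(left + missing) + sse(right)
    loss_if_right = sse(left) + sse(right + missing)
    if loss_if_left <= loss_if_right:
        return "left", loss_if_left
    return "right", loss_if_right


nan = float("nan")
# Toy data: rows with a missing feature behave like the high-x group.
xs = [1.0, 2.0, 3.0, nan, 8.0, 9.0, nan]
ys = [10.0, 11.0, 10.0, 30.0, 31.0, 29.0, 30.0]
print(split_with_default(xs, ys, threshold=5.0))
```

Here the missing rows look like the high‑x group, so the sketch learns to send them right; no imputation step is needed beforehand.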

Toward a balanced view

Boosting is not a magic bullet. Like any model, it can overfit, pick up spurious patterns or amplify biases in training data. The Bank of England’s survey emphasises the importance of governance: most firms using AI employ some form of model explainability and allocate accountability for AI outputs to senior leadership[1]. That organisational framework is essential when deploying boosting models that may inform trading decisions or risk assessments.

At the same time, the examples above demonstrate how boosting can deliver tangible benefits—better price discovery, faster risk monitoring, more accurate yield‑curve forecasts and ultra‑low‑latency trading decisions. For practitioners, the takeaway is that established, interpretable techniques often provide the best return on investment, especially when paired with rigorous data quality and model‑validation processes.

Conclusion

In a world where hype often overshadows substance, gradient boosting reminds us that elegant solutions can still outperform flashier alternatives. By iteratively correcting their own mistakes, boosting models embody a mindset familiar to successful investors: learning from past errors to make better decisions in the future. As capital‑markets firms continue to integrate AI into their workflows, boosting stands as a proven, versatile tool, quietly delivering value without the fanfare.

References:

[1] Bank of England – Artificial intelligence in UK financial services (2024). https://www.bankofengland.co.uk/report/2024/artificial-intelligence-in-uk-financial-services-2024

[2] WatersTechnology – Getting aggressive: Overbond uses AI to assess dealer axes. https://www.waterstechnology.com/trading-tech/7951732/getting-aggressive-overbond-uses-ai-to-assess-dealer-axes

[3] Bank of Canada – Finding a needle in a haystack: A Machine Learning Framework for Anomaly Detection in Payment Systems. https://publications.gc.ca/pub?id=9.938493&sl=0

[4] RoZetta Technology – Harnessing AI and ML to Transform Fixed Income Markets. https://www.rozettatechnology.com/harnessing-ai-and-ml-to-transform-fixed-income-markets-opportunities-and-challenges/

[5] Xelera Technologies – Ultra‑low Latency XGBoost with Xelera Silva. https://www.xelera.io/post/ultra-low-latency-xgboost-with-xelera-silva

[6] Xelera Technologies – Machine Learning Inference for HFT. https://www.xelera.io/post/machine-learning-inference-for-hft-how-xelera-silva-and-icc-deliver-ultra-low-latency-trading-decisions

[7] Innowise – Machine Learning for Stock Trading. https://innowise.com/case/trading-software-development/