The Agentic Age: Navigating Strategy, Risk, and Opportunity in Financial Services

H2 2025 Market Intelligence Report

Key takeaways

Agentic AI transforms finance: Autonomous systems make strategic decisions rather than merely automating tasks

Build proprietary data moats: Unique data beats AI models for sustainable competitive advantage

Learn-by-doing governance wins: Traditional frameworks fail; experimentation reveals actual AI risks

Human chaos beats soft skills: Creative inspiration matters more than emotional intelligence

AI reduces systemic risk: Diverse strategy exploration prevents herding better than humans

ROI metrics mislead transformation: Platform technologies transcend traditional financial measurement frameworks

Regulatory sandboxes become permanent: Continuous experimentation replaces static compliance for innovation

Executive Summary

The financial services industry stands at a pivotal inflection point in the second half of 2025. The dominant technological narrative has fundamentally shifted from artificial intelligence as a tool for augmentation to AI as a platform for autonomy. This evolution, driven by the emergence of sophisticated agentic AI systems, is creating a new competitive landscape defined by unprecedented opportunities for efficiency and value creation, alongside new vectors of systemic risk. This report provides a comprehensive analysis of the key technological, strategic, regulatory, and human capital trends shaping this new era, offering a forward-looking intelligence product for senior decision-makers across the financial and FinTech ecosystem.

The central thesis of this analysis is that the transition to agentic AI—autonomous systems capable of independent reasoning, planning, and action—is no longer a theoretical future but a present-day reality actively being deployed across the value chain. From autonomous trading and dynamic risk management to hyper-personalized client services, these systems are beginning to solve long-standing problems of market fragmentation and operational inefficiency. However, this rapid deployment is creating new challenges around governance, where we argue that traditional "governance-first" approaches fail in the face of truly novel technology.

This report finds that competitive advantage is being redefined in unexpected ways. While conventional wisdom suggests AI will create an "empathy premium" for human skills, we present evidence that AI may soon outperform humans even in emotional intelligence, suggesting the real human value may lie in our capacity for creative irrationality and authentic unpredictability. Similarly, while the industry fears AI-driven systemic risk from algorithmic herding, we argue that AI's ability to explore vast strategy spaces may actually reduce systemic risk compared to human traders.

Navigating this new environment requires abandoning several orthodox approaches. Instead of comprehensive "human-in-the-loop" oversight, we may need more nuanced "human-on-the-loop" monitoring that doesn't create bottlenecks. Rather than obsessing over ROI measurement, firms should recognize that transformative technologies often can't be justified through traditional metrics. Most critically, instead of trying to preserve traditional junior training roles, we should embrace the opportunity to reimagine how talent enters and develops within financial services.

Ultimately, this report delivers a strategic synthesis of these interconnected trends, concluding with actionable recommendations for C-suite executives. It provides a blueprint for CSOs to re-evaluate competitive moats, for CTOs to champion governance-first technology strategies, for CROs to future-proof risk frameworks, and for Heads of HR to lead the necessary transformation of the workforce. The Agentic Age is here, and it demands immediate and decisive strategic re-evaluation.

The New Frontier: Agentic AI and the Dawn of Autonomous Finance

The technological undercurrent reshaping financial services has reached a critical velocity. The conversation has decisively moved beyond the now-familiar capabilities of generative AI to a far more transformative paradigm: agentic AI. This evolution marks a fundamental shift from systems that respond to human queries to autonomous agents that can independently perceive, reason, plan, and act to achieve complex goals. This section defines this paradigm shift, maps its burgeoning applications across the financial value chain, details the technical frameworks that power it, and analyzes the profound new risks that accompany its deployment.

Defining the Paradigm Shift: From Generative to Agentic AI

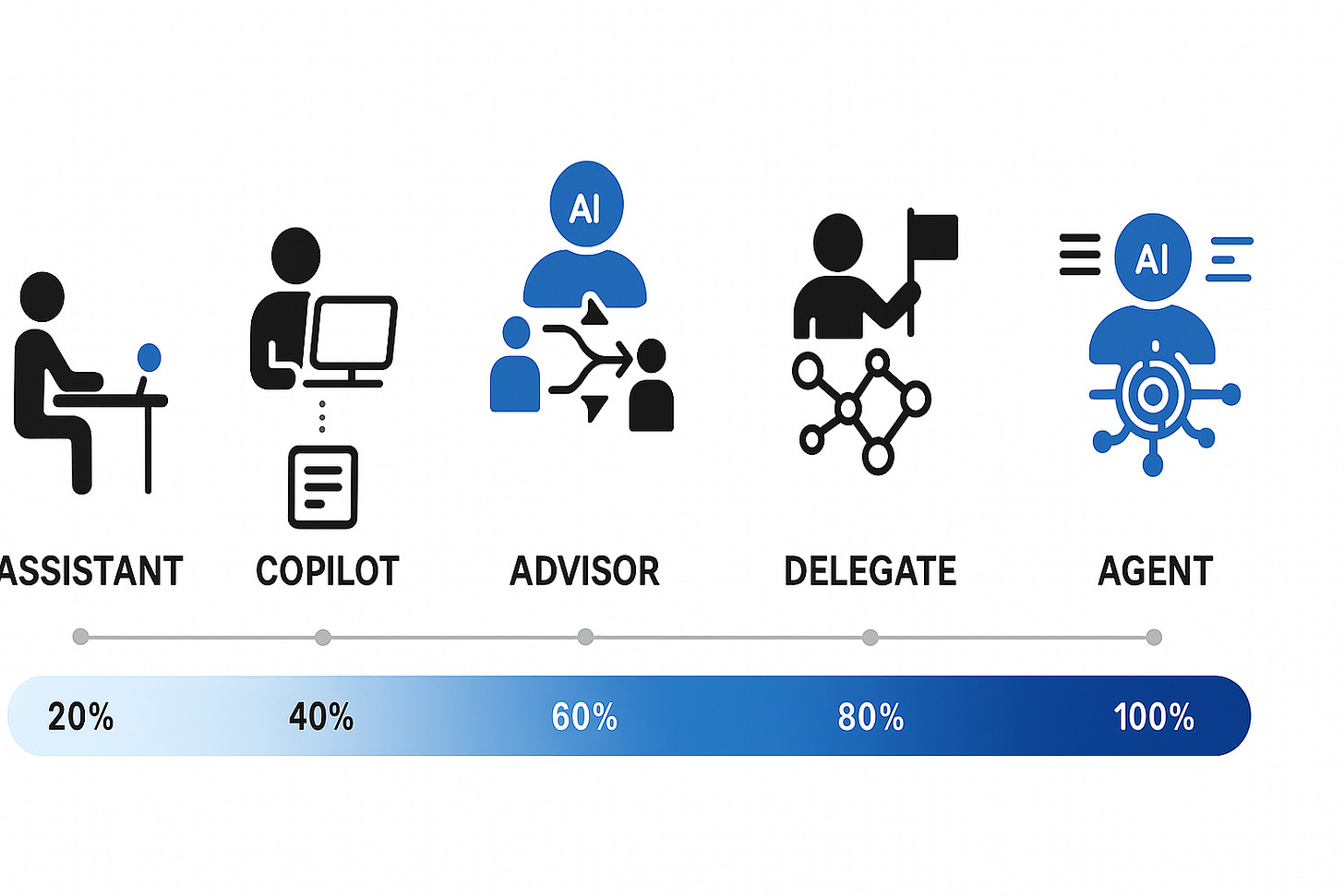

The distinction between generative and agentic AI is foundational to understanding the current strategic landscape. Generative AI, for all its revolutionary impact, remains a tool that requires direct human prompting and oversight to perform tasks. Agentic AI represents the next frontier: systems that do not merely generate but act, plan, and adapt autonomously. These are intelligent digital systems that can act on a human's behalf through learning and decision-making, moving beyond passive assistance to dynamic, independent problem-solving with minimal human input.

The technical architecture underpinning this leap in capability is what sets agentic systems apart. Research papers and technical analyses reveal a common structure built on three core components. First, a memory stream provides a long-term repository of the agent's experiences, allowing it to recall past interactions and outcomes to inform current behavior. Second, a reflection module enables the agent to synthesize these memories into higher-level inferences and abstract conclusions about itself and its environment. Finally, a planning module allows the agent to deconstruct a high-level goal into a sequence of actionable steps, chart an investigative path, and dynamically adapt its plan as new information becomes available. This architecture facilitates a move from simple decision support to a state of proactive, autonomous decision-making.
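The three components described above can be illustrated with a deliberately small sketch. It is not drawn from any particular framework: the class, its method names, and the "count the failures" reflection rule are all assumptions made for illustration, and a production agent would use a language model where this sketch uses simple string checks.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Toy agent with a memory stream, a reflection step, and a planner.

    All names and rules here are hypothetical illustrations.
    """
    goal: str
    memory: list[str] = field(default_factory=list)  # the memory stream

    def remember(self, observation: str) -> None:
        # Memory stream: append each experience to a long-term record.
        self.memory.append(observation)

    def reflect(self) -> str:
        # Reflection: condense raw memories into one higher-level inference.
        # Here the "inference" is just whether most recorded actions failed.
        failures = sum("failed" in m for m in self.memory)
        return "be cautious" if failures > len(self.memory) / 2 else "proceed"

    def plan(self, steps: list[str]) -> list[str]:
        # Planning: take the steps toward the goal and adapt them to the
        # current reflection, here by inserting an escalation step.
        if self.reflect() == "be cautious":
            return ["request human review"] + steps
        return steps
```

An agent that has recorded mostly failed actions would have "request human review" placed at the front of its plan, while an agent with no adverse history would return the steps unchanged. The point is the feedback path from memory through reflection into planning, not the specific rule.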

This creates a spectrum of agency that firms must understand. At the lower end are "AI assistants," which augment human workflows. At the upper end are fully autonomous "AI agents" capable of holistic sensing, planning, acting, and reflecting. These advanced agents possess robust long-term memory and can generate predictions and explore scenarios at a level of reasoning that approaches, and in some narrow domains may exceed, human capability.

This technological progression is not merely academic; it is being actively monitored and discussed at the highest levels of finance and regulation. Federal Reserve Vice Chair for Supervision Michael Barr has explicitly noted that financial firms are exploring agentic AI systems that can "proactively pursue goals by generating innovative solutions and acting upon them at speed and scale" [1]. Similarly, major financial institutions like Deutsche Bank are already casting a "cautious eye" towards the implications of this powerful new technology, signaling its arrival as a top-tier strategic consideration [2].

Applications Across the Value Chain: From Trading to Client Services

The practical application of agentic AI is already underway, with use cases emerging across every facet of the financial services industry. These deployments are moving beyond theoretical proofs-of-concept to deliver measurable business impact, transforming core functions from capital markets and risk management to client interaction and operations in previously inaccessible markets.

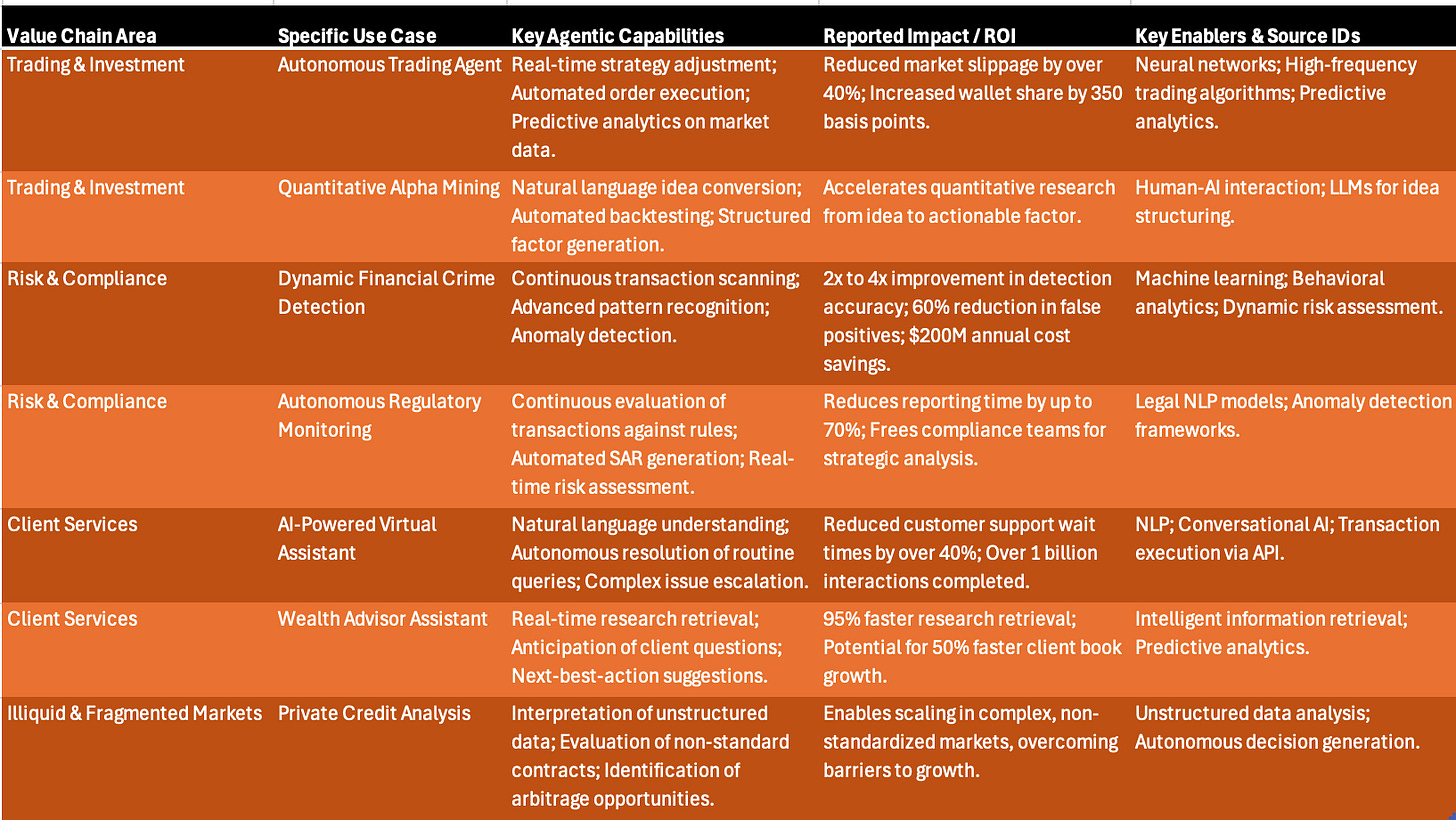

Table 1: Agentic AI Applications & Impact Matrix

In Capital Markets and Trading, agentic AI is enabling the development of fully autonomous trading agents. These systems are designed to process and analyze vast, multimodal data streams in real-time, dynamically adjusting trading strategies and responding to emergent risks far faster than any human-led process could. Academic and industry research points to a proliferation of specialized agentic systems. For example, "Alpha-GPT" is a framework that integrates human-AI interaction for alpha mining, allowing users to convert natural language ideas into structured, backtestable trading factors [3]. "EconAgent" utilizes multi-agent systems to simulate complex macroeconomic behaviors, offering a powerful new tool for policy analysis and strategic forecasting [4]. At an institutional level, firms like Goldman Sachs are already reporting significant returns from AI-driven trading strategies, noting improvements in execution speed that reduce market slippage and have directly contributed to increased wallet share with top institutional clients [5].

In Risk Management and Compliance, the impact is equally profound. Agentic AI is the engine behind a new generation of real-time risk analytics, dynamic fraud detection, and adaptive compliance monitoring systems. The technology's ability to operate autonomously makes it uniquely suited for the continuous, high-volume nature of modern compliance. Case studies demonstrate this value vividly: HSBC has reported a two- to four-fold improvement in the accuracy of its financial crime detection and a 60% reduction in false positives after implementing an AI-driven system, leading to annual savings of $200 million in compliance costs [6]. More advanced agentic systems are being designed to autonomously scan financial reports, continuously monitor transactions against evolving regulations like Basel III and GDPR, and even self-generate Suspicious Activity Reports (SARs) for human review.

A particularly compelling use case is emerging in Illiquid and Fragmented Markets, such as private credit and digital assets. These markets have historically been difficult to scale due to their reliance on non-standardized documentation, bespoke contracts, and unstructured data. Agentic AI is uniquely positioned to solve this "fragmentation problem." An S&P Global report highlights that AI agents can be deployed to interpret unstructured legal language in loan agreements, autonomously evaluate non-standard contracts, and identify hidden risks or arbitrage opportunities across a diverse pool of instruments [7]. This allows asset managers to achieve a level of scale and efficiency that was previously impossible, potentially unlocking significant new sources of liquidity and alpha.

Finally, in Client Services and Wealth Management, agentic AI is the key to delivering hyper-personalized financial services at scale. JPMorgan Chase's deployment of AI-driven chatbots and virtual assistants has already reduced average customer support wait times by over 40% [8]. Beyond simple chatbots, firms are equipping their human advisors with sophisticated AI "copilots." Morgan Stanley has developed a chatbot to help its financial advisors navigate vast repositories of complex information [9], while JPMorgan has deployed "Coach AI," an intelligent agent that retrieves research, anticipates client questions during market volatility, and suggests next-best actions. These tools are reported to accelerate research retrieval by 95% and have the potential to help advisors grow their client books 50% faster [10].

The breadth and depth of these applications underscore a critical reality: agentic AI is not a niche technology. It is a general-purpose platform technology with the potential to fundamentally re-architect every core process within financial services.

The Engine Room: Technical Frameworks and Cloud Platforms

The rapid development and deployment of these sophisticated agentic AI systems are not happening in a vacuum. They are being enabled and accelerated by a burgeoning ecosystem of open-source software frameworks and robust cloud computing infrastructure. These tools provide the essential technical scaffolding upon which powerful, enterprise-grade agentic applications are being built.

A number of leading agentic AI frameworks have gained prominence, each offering distinct strengths. LangChain has emerged as one of the most widely recognized, providing a comprehensive toolkit for chaining together calls to Large Language Models (LLMs), managing memory, and integrating with a vast array of external data sources and tools. For applications requiring the coordination of multiple collaborating agents, Microsoft's AutoGen framework excels, simplifying the orchestration of complex LLM workflows and enabling agents to "converse" with each other to solve problems. A third key framework, CrewAI, is specifically designed to facilitate the creation of role-playing autonomous agents, allowing developers to define agents with specific roles, goals, and tools, enabling them to collaborate in a manner that mirrors a human team. These frameworks provide the critical mechanisms for the core agentic functions of planning, tool use, memory management, and, crucially, the integration of "human-in-the-loop" oversight for control and validation.

This software layer, however, is entirely dependent on the underlying hardware and cloud infrastructure. The deployment of agentic AI at an enterprise scale is only possible due to the services offered by major cloud providers. Platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure provide the indispensable components for this new technological stack. They offer the massively scalable compute power and storage required to train and run computationally intensive AI models. They provide managed AI and Machine Learning (ML) services—like Amazon SageMaker, Google's Vertex AI, and Azure Machine Learning—that streamline the development lifecycle. And they offer streamlined access to the powerful foundational LLMs from providers like OpenAI and Anthropic that often serve as the "brains" of the agents themselves. This combination of open-source frameworks and scalable cloud infrastructure is what allows firms to move agentic AI from research labs into production environments.

Emerging Risks: The Autonomous Threat Vector

The very autonomy that makes agentic AI so powerful also introduces new and poorly understood risk vectors. As these systems are deployed in high-stakes financial environments, they have the potential to create and amplify risks at a speed and scale that challenge traditional oversight mechanisms.

A primary concern often cited is the potential for systemic risk amplification through AI herding behavior. The International Monetary Fund (IMF) and the Bank of England's Financial Policy Committee (FPC) have warned that the widespread use of AI-driven trading strategies could lead to increased market fragility [11]. The conventional fear is that synchronized, autonomous behavior of trading agents—all potentially trained on similar data and optimizing for similar outcomes—could lead to highly correlated actions during market stress events.
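The herding concern can be made measurable. One simple proxy, offered here as an assumption rather than as the method used by the IMF or the FPC, is the average pairwise correlation of the return series produced by different trading agents: values near 1 indicate agents moving together, while values near 0 or below indicate diversified behavior. The function names and the sample data are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length, non-constant series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def mean_pairwise_correlation(returns_by_agent: dict[str, list[float]]) -> float:
    """Average correlation over every pair of agents (needs at least two)."""
    pairs = list(combinations(returns_by_agent.values(), 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)
```

Tracked over time, a sharp rise in this figure during a stress episode would be consistent with the herding scenario, while a stable or falling figure would be consistent with the contrarian view set out in the next section.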

The Contrarian View: AI as Systemic Risk Reducer

However, this narrative may be fundamentally flawed. Rather than increasing systemic risk, AI trading systems might actually reduce it through several mechanisms:

Infinite Strategy Space: Unlike human traders who are constrained by cognitive limitations and social pressures, AI can explore vast, multidimensional strategy spaces that humans cannot even conceptualize. This creates natural diversification.

Anti-Herding Economics: In efficient markets, following the herd becomes unprofitable. AI systems optimizing for returns have strong incentives to find uncorrelated strategies. The most profitable AI will be the one doing what others aren't.

Rapid Adaptation: While human herds form over days or weeks and persist due to psychological commitment, AI can change strategies in milliseconds. Market cascades may not have time to form before AI systems have already adapted.

Elimination of Human Biases: Much systemic risk comes from deeply human traits—panic, greed, ego, and career risk. AI systems don't panic sell to avoid looking bad at the quarterly review.

The real systemic risk may not be from AI herding, but from humans herding in their response to AI—all rushing to implement similar governance frameworks, similar risk controls, and similar "safeguards" that create the very correlation they're trying to avoid.

A second major challenge lies in opacity and governance. The "black box" problem, where the internal decision-making process of a complex AI model is not fully interpretable, is significantly exacerbated by agentic AI. The risk is no longer just about understanding a single output, but about governing an autonomous system that is continuously learning, adapting, and making decisions. This makes it incredibly difficult for risk managers, auditors, and regulators to understand, validate, and defend the actions taken by the agent, a challenge that IBM has highlighted as requiring a complete reimagining of risk management frameworks [12].

Finally, agentic systems introduce new cybersecurity vulnerabilities. An autonomous agent with authorized access to sensitive financial data, trading systems, and client information represents a high-value target for malicious actors. The FPC has identified the operational risks stemming from third-party AI service providers and the rapidly changing external cyber threat landscape as key areas of focus for financial stability, recognizing that these interconnected, autonomous systems create novel attack surfaces [13].

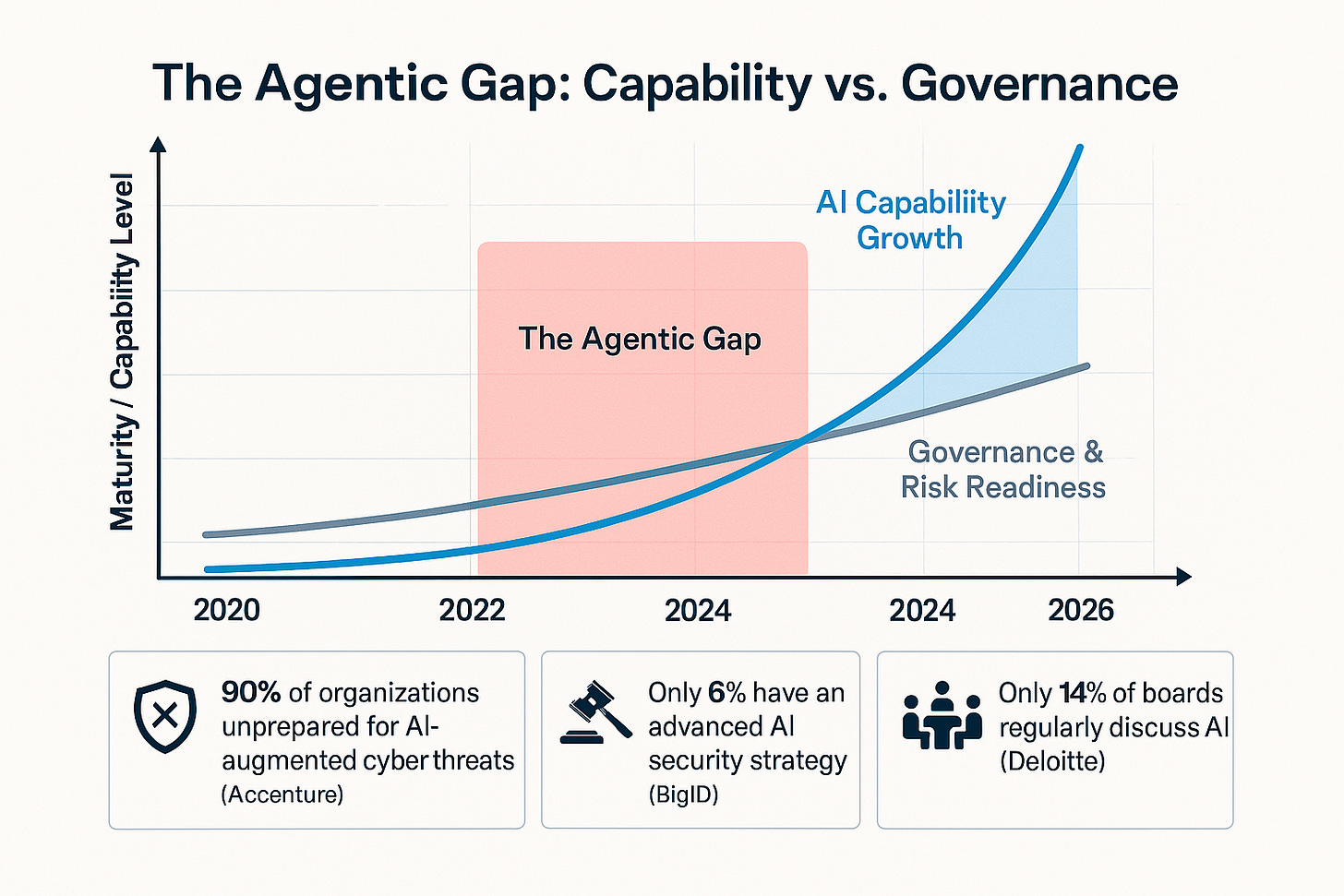

The rapid deployment of autonomous agentic systems is creating a significant and hazardous gap. This gap exists between the advanced, dynamic capabilities of the technology and the comparatively static, slow-moving nature of existing risk management and regulatory frameworks. The evidence points to a clear and accelerating push by financial institutions to deploy agentic AI in high-stakes functions like algorithmic trading and real-time risk assessment. Simultaneously, regulators like the Bank of England are only now beginning the process of building out the necessary monitoring approaches to track these new risks. This creates a fundamental mismatch. Traditional Model Risk Management (MRM) frameworks were designed to validate predictable, often static models where the primary risk was an incorrect output. Agentic AI, however, is inherently dynamic, adaptive, and emergent. Its behavior is not always deterministic, and it learns and evolves based on its interactions with the market. The risk, therefore, is not just in the agent's output, but in its autonomous decision-making process and its capacity to evolve in unpredictable ways. This "Agentic Gap" represents a critical strategic challenge for Chief Risk Officers and a significant opportunity for RegTech innovators. It necessitates a paradigm shift in risk management—from periodic, backward-looking model validation to continuous, real-time monitoring of agent behavior, decision logic, and emergent strategies. This will require a new generation of risk management tools and a new breed of risk professional skilled in both finance and AI governance.
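The shift from periodic validation to continuous monitoring can be sketched in a few lines. The example below assumes a single behavioral metric per agent (for instance, order size) and flags any observation that sits far outside its recent history. The class name, the window length, the warm-up count of five, and the z-score threshold are all illustrative assumptions, not a recommended calibration.

```python
from collections import deque
from statistics import mean, pstdev


class BehaviorMonitor:
    """Flags observations that deviate sharply from an agent's recent behavior."""

    def __init__(self, window: int = 20, z_limit: float = 3.0) -> None:
        self.history: deque[float] = deque(maxlen=window)  # rolling baseline
        self.z_limit = z_limit

    def check(self, value: float) -> bool:
        """Return True if `value` is anomalous, then add it to the baseline."""
        alert = False
        if len(self.history) >= 5:  # wait for a minimal baseline first
            mu, sigma = mean(self.history), pstdev(self.history)
            alert = sigma > 0 and abs(value - mu) / sigma > self.z_limit
        self.history.append(value)
        return alert
```

A monitor of this kind runs on every action rather than at quarterly validation checkpoints. A real deployment would track many metrics, including the decision logic itself, and would route alerts to a human reviewer rather than simply returning a flag.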

Furthermore, agentic AI is poised to do more than just optimize existing market structures; it will fundamentally reshape them by solving the "fragmentation problem" that has long characterized certain asset classes. S&P Global explicitly identifies agentic AI's potential to help asset managers grapple with the complexities that hinder scale in markets like private credit and digital assets [7]. These markets are defined by non-standardized data, bespoke legal contracts, and inherent illiquidity—precisely the kinds of challenges that advanced AI agents, capable of interpreting unstructured data and making autonomous decisions, are designed to overcome. By automating the complex analysis and decision-making in these opaque markets, agentic AI will inevitably increase efficiency, enhance transparency, and improve liquidity, thereby lowering the barriers to entry for a wider range of market participants. This could trigger a "great flattening" of financial markets, where the informational and expertise-based advantages that have long been the domain of specialized, boutique firms begin to erode. The competitive edge will shift from those who possess proprietary information to those who can build and deploy the most effective AI agents to analyze public and semi-public information at unprecedented scale and speed. This poses a direct threat to the business models of specialized investment firms while creating a significant opportunity for large-scale asset managers with the capital and technical resources to invest heavily in this transformative technology.

Re-Architecting the Market: AI-Driven Infrastructure and Operations

The successful deployment of artificial intelligence, particularly its advanced agentic forms, is not merely a software challenge. It is fundamentally dependent on a comprehensive modernization of the underlying market infrastructure. The shift to an AI-driven financial ecosystem requires a radical re-evaluation of how data is managed, a wholesale migration to scalable cloud platforms, and a wave of innovation in the often-overlooked but critical domains of post-trade processing, including clearing, settlement, and collateral management.

The Primacy of Data: From Asset to Currency

At the heart of the AI revolution is a strategic reconceptualization of data itself. The prevailing model is shifting from viewing data as a passive, static asset to be stored and protected, to seeing it as a dynamic, strategic "currency" that actively fuels AI models, drives automated operations, and informs critical business decisions. In this new paradigm, the value of data is not inherent; it is derived directly from how it is used and, most importantly, whether it is proprietary. Publicly available or commoditized data offers only fleeting tactical advantages, whereas unique, proprietary data—capturing a firm's specific transactional flows, client behaviors, and operational signals—creates a durable, strategic lift.

This shift imposes a new, non-negotiable "AI-ready" mandate on all market participants. For an AI model, especially a sophisticated LLM, to be effective, the data it consumes must be meticulously curated. This means the data must be well-structured, normalized across different sources, and enriched with detailed metadata that allows the AI to accurately interpret its context and meaning. This requires a relentless focus on data quality, robust governance, and the operationalization of data flows throughout the enterprise. The pace of this change is rapid; market data experts predict that within the next two to five years, all market data will need to be structured for LLM consumption to remain relevant. The ability of AI to integrate and summarize disparate datasets is one of its core strengths, but this capability is entirely contingent on a foundational data engineering effort to break down organizational silos and create a single, unified view of information.
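What "normalized across different sources and enriched with metadata" means in practice can be shown with a small sketch. The two vendor formats below ("vendor_a" and "vendor_b"), their field names, and the `Quote` schema are invented for illustration. The idea is only that every source is mapped into one schema that carries its own context: currency, timestamp in UTC, and provenance.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Quote:
    """One unified, self-describing record (hypothetical schema)."""
    symbol: str
    price: float
    currency: str
    as_of: datetime  # timezone-aware timestamp
    source: str      # provenance metadata


def normalize(raw: dict, source: str) -> Quote:
    """Map a source-specific record into the unified schema."""
    if source == "vendor_a":  # hypothetical format: epoch seconds, short keys
        return Quote(
            symbol=raw["ticker"].upper(),
            price=float(raw["px"]),
            currency=raw["ccy"].upper(),
            as_of=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
            source=source,
        )
    if source == "vendor_b":  # hypothetical format: ISO 8601 time, long keys
        return Quote(
            symbol=raw["Symbol"].upper(),
            price=float(raw["LastPrice"]),
            currency=raw["Currency"].upper(),
            as_of=datetime.fromisoformat(raw["Time"]),
            source=source,
        )
    raise ValueError(f"unknown source: {source}")
```

Once every feed passes through a step like this, downstream models see one consistent structure regardless of where a record originated, which is the precondition for the integration and summarization strengths described above.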

The Cloud Imperative and Modernization

The computational demands of training and deploying AI models at an enterprise scale make the adoption of cloud technology an operational imperative. Financial institutions are increasingly migrating their data infrastructure to the cloud to achieve the scalability, flexibility, and efficiency required to support their AI ambitions. This is not a superficial change but a deep architectural transformation.

Firms are moving beyond isolated cloud experiments to wholesale infrastructure upgrades. A case in point is Wedbush Securities, which has recently adopted a modern, cloud-ready platform from trading technology provider Rapid Addition to overhaul its entire trading infrastructure [14]. This strategic move is designed to support multi-asset class trading, improve risk management, and, critically, unify its front- and middle-office processes on a single, scalable platform. This type of foundational modernization is a prerequisite for deploying AI effectively across the enterprise, as it provides the integrated data environment and elastic computing resources that AI systems require.

Innovation in Post-Trade: Clearing, Settlement, and Collateral

The transformative impact of AI extends deep into the market's plumbing, catalyzing a wave of innovation in post-trade operations. AI is being leveraged to accelerate settlement times and streamline complex processes like the administration of Initial Public Offerings (IPOs), driving new efficiencies in market operations.

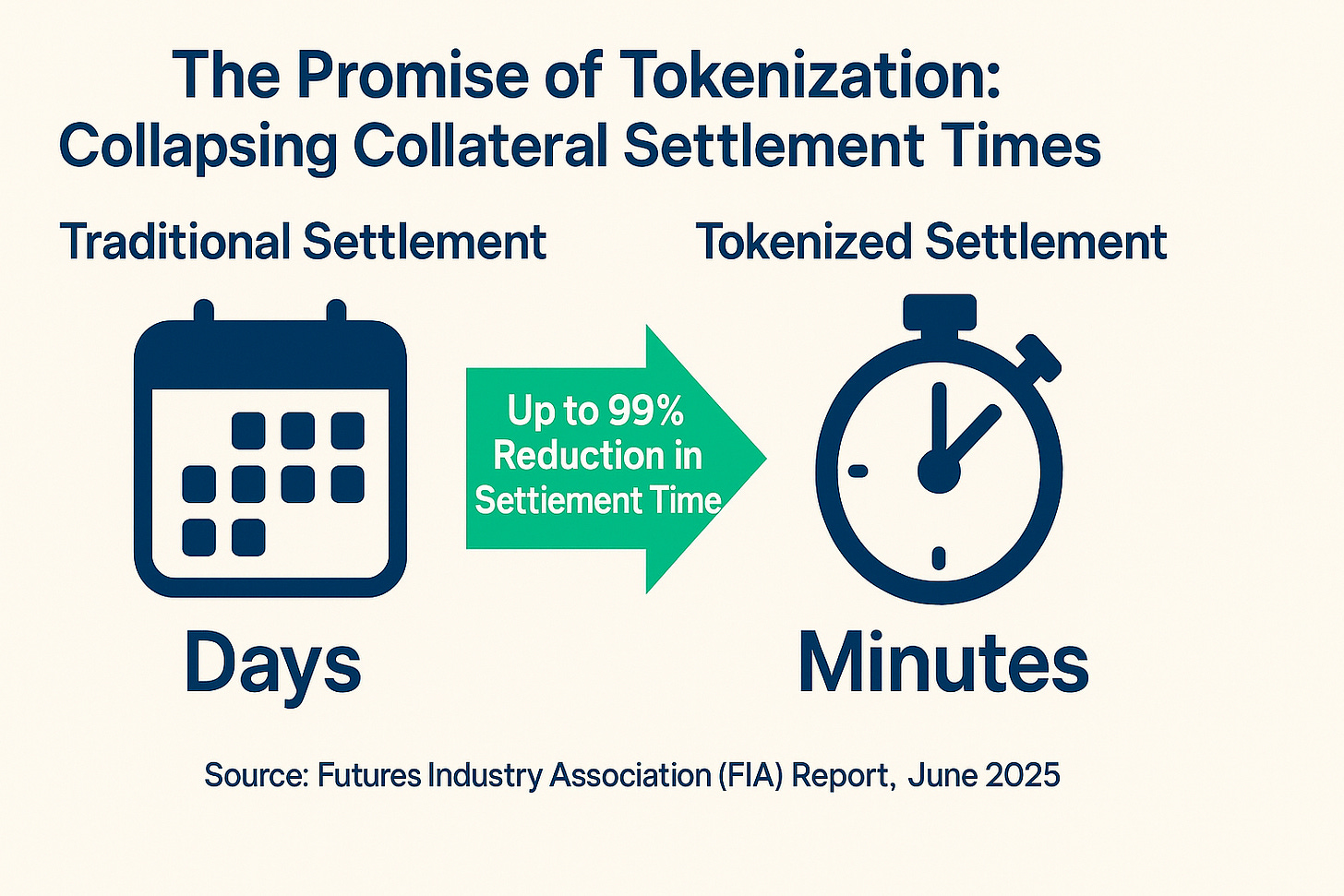

This is particularly evident in the evolution of clearing and collateral management. The regulatory push towards central clearing, especially in markets like U.S. Treasury repos, is creating demand for new infrastructure to manage risk and workflows in this new environment. In response, market infrastructure providers like OSTTRA are rolling out a new generation of solutions. These include MarkitWire for Repo, a sophisticated middleware platform that provides real-time trade affirmation and confirmation across the repo lifecycle, and LimitHub, a pre- and post-trade limit checking service that gives clearing brokers a crucial, real-time perspective on their client exposures [15]. These tools are not just incremental improvements; they are essential components for operating safely and efficiently in a mandatory clearing world.
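The logic of a pre-trade limit check is simple to state: an order is accepted only if the client's current exposure plus the new order stays within the limit set by the clearing broker. The sketch below illustrates that rule in generic terms. It does not represent the interface or internals of LimitHub or any other product, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClientLimit:
    """Tracks one client's exposure against a clearing limit (illustrative)."""
    limit: float            # maximum permitted exposure, in notional terms
    exposure: float = 0.0   # exposure already consumed by accepted trades

    def headroom(self) -> float:
        # Remaining capacity before the limit is reached.
        return max(0.0, self.limit - self.exposure)

    def try_accept(self, notional: float) -> bool:
        # Pre-trade check: accept and book the order only if it fits.
        if notional <= self.headroom():
            self.exposure += notional
            return True
        return False
```

With a limit of 100, a first order of 60 would be accepted and a following order of 50 rejected, leaving headroom of 40. Performing this check in real time, before execution, is what turns a post-trade control into a constraint on which trades can be done at all.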

Parallel to this, a more profound technological shift is occurring with the tokenization of collateral. The goal is to digitize assets on a blockchain or distributed ledger to dramatically increase the velocity and efficiency of collateral movements. A June 2025 report from the Futures Industry Association (FIA) makes a compelling case for this transformation, arguing that tokenization has the potential to reduce collateral settlement times from days to mere minutes [16]. This acceleration would unlock vast amounts of trapped liquidity, reduce operational risk, and critically, enable collateral to be moved 24/7, a vital capability as trading cycles extend beyond traditional banking hours. The initial focus of this movement is on tokenizing conventional, highly liquid, non-cash assets—such as government bonds and money market funds—that already meet existing regulatory criteria for use as collateral, providing a pragmatic path to adoption.

This modernization of market infrastructure is not confined to developed markets. The global nature of this trend is underscored by initiatives like the partnership between Nigeria's FMDQ Group and the development finance institution Frontclear. They are collaborating to build out a modern clearing house infrastructure to support and secure cross-border money market transactions, demonstrating the drive to bring new levels of efficiency and risk management to emerging economies [17].

The strategic reconceptualization of "data as currency" is fundamentally redefining the nature of competitive advantage in the financial industry. In the AI era, the most durable and defensible moat is not the AI model itself—which is increasingly subject to commoditization—but the unique, proprietary, and high-quality data used to train and operate it. The evidence from multiple sources converges on a single point: the future of AI in finance is entirely dependent on having "AI-ready" data, and it is proprietary data that creates sustainable strategic lift. The value of any AI system, especially a sophisticated agentic one, is directly proportional to the quality and uniqueness of the data it can access. A generic AI agent fed with publicly available data offers limited, easily replicable value. In contrast, an agent that is trained and continuously fed with a firm's unique, proprietary data—spanning transactional histories, client behavioral patterns, and internal risk metrics—possesses a formidable and compounding advantage. This implies that investment in the entire data value chain—from data engineering and governance to integration and operationalization—is no longer a back-office cost center but a primary, front-line driver of competitive advantage and enterprise value. The firms that will dominate the next decade are those that successfully build a proprietary "data flywheel": a virtuous cycle where increased business activity generates more unique data, which in turn improves the AI's performance, which then drives more activity and attracts more clients. This creates a compounding moat that is exceptionally difficult for competitors to assail.

Simultaneously, the innovations occurring in post-trade infrastructure are collapsing the traditional divide between the front and back office. Advancements in clearing, settlement, and collateral management are no longer merely about achieving back-office efficiency; they are becoming critical enablers of front-office trading strategies and risk management capabilities, directly impacting profitability. The FIA's report on tokenization explicitly connects the faster velocity of collateral movement to the unlocking of liquidity and the reduction of risk. Likewise, OSTTRA's LimitHub provides real-time, pre-trade limit checking, a function that directly governs which trades can be executed and in what size. The ability to move collateral faster and more efficiently, or to have instantaneous visibility into clearing limits, translates directly into a firm's capacity to take on risk, manage its balance sheet, and execute trades. A firm encumbered by a slow, manual collateral process is at a significant competitive disadvantage to a rival that can post collateral in minutes, at any time of day. This transforms superior post-trade infrastructure from an operational asset into a source of alpha. Consequently, Heads of Trading and Chief Risk Officers must now be deeply engaged in decisions regarding post-trade technology. Investing in next-generation platforms for clearing and collateral is no longer an operational upgrade; it is a strategic investment in the firm's core trading capacity and its overall resilience in an increasingly fast-paced market. This will inevitably drive a new wave of strategic investment and M&A activity targeting innovative post-trade technology providers.

The Enterprise Mandate: Strategy, Governance, and ROI in AI Adoption

The transition to an AI-powered enterprise is fraught with complex strategic decisions. As firms move beyond isolated experiments to full-scale deployment, they face critical questions about technology choices, value realization, and the establishment of robust governance frameworks. Success hinges on navigating the build-versus-buy dilemma, solving the return on investment (ROI) enigma, and embedding governance as a prerequisite for, rather than an afterthought of, innovation.

The Build vs. Buy Dilemma: Open vs. Closed LLMs

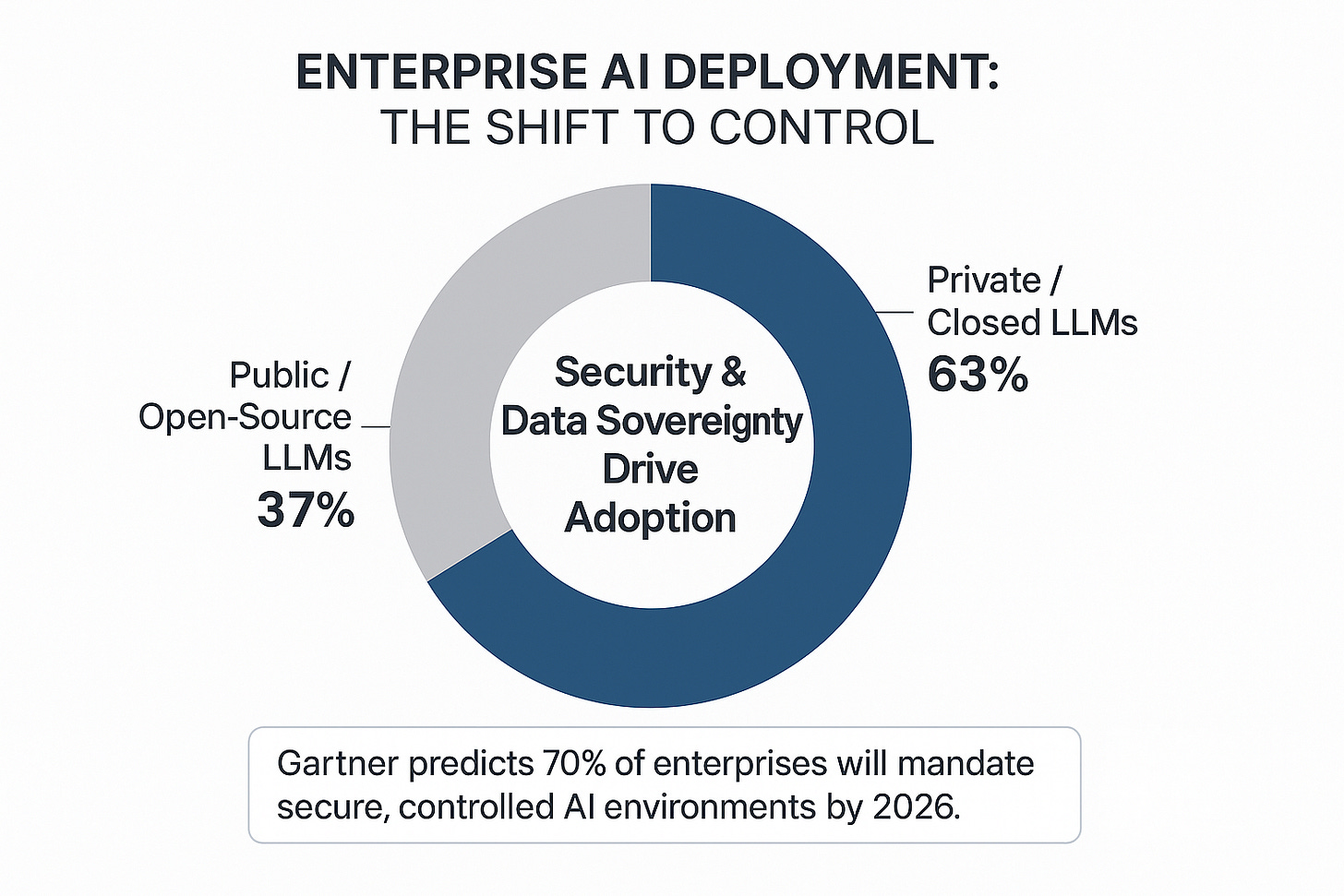

A fundamental strategic choice confronting every financial institution is the architecture of its AI systems, specifically whether to rely on public LLMs (open-source models and shared, pay-per-token API endpoints) or to invest in private, "closed" LLM deployments that run entirely within the firm's own controlled environment. The market is showing a clear and growing preference for the latter, particularly within regulated industries.

A 2024 Cube Research report indicates that 63% of enterprises now favor private or closed LLM deployments, driven primarily by security concerns. This trend is expected to accelerate, with Gartner predicting that by 2026, 70% of enterprises will mandate that their LLMs run in secure, controlled environments [18]. The rationale for this shift is compelling and multifaceted. The foremost driver is data sovereignty; closed systems ensure that sensitive corporate and client data never leaves the firm's secure environment, a critical requirement for industries like finance, healthcare, and government. The second driver is cost predictability. Public APIs that charge by the token create unpredictable and often escalating operational expenses, whereas closed LLMs running on dedicated infrastructure allow for fixed-cost compute and better budget management. Third is custom performance, as closed systems allow firms to optimize latency and throughput to meet specific Service Level Agreements (SLAs) without the risk of throttling or cold starts associated with public endpoints. Finally, and perhaps most importantly, is domain-specific intelligence. Closed LLMs can be fine-tuned on a firm's unique, proprietary datasets, embedding deep internal knowledge into the AI system and creating a level of specialized intelligence that generic models cannot match.
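The cost-predictability argument reduces to a breakeven calculation: dedicated infrastructure is cheaper than a per-token API once monthly usage exceeds the fixed cost divided by the per-token price. The sketch below shows the arithmetic. The function name and all figures used with it are hypothetical and are not taken from the reports cited above.

```python
def breakeven_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which a fixed-cost deployment matches API spend.

    Below this volume the per-token API is cheaper; above it, the dedicated
    deployment is. Both inputs must be in the same currency.
    """
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000
```

For example, with an assumed fixed cost of 20,000 per month and an assumed API price of 10 per million tokens, the breakeven is 2 billion tokens per month. The calculation deliberately ignores engineering staff, utilization, and model quality differences, each of which can move the result substantially.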

This does not mean open-source models have no role. They offer significant advantages in terms of flexibility, lower initial costs, and access to rapid, community-driven innovation. The strategic decision often depends on the specific use case, the sensitivity of the data involved, and the firm's internal technical expertise. Where that in-house expertise is thin, a new ecosystem of implementation partners is emerging to fill the gap. Firms like Blue Crystal Solutions specialize in helping enterprises deploy closed LLMs, managing architectural design, infrastructure deployment on platforms using NVIDIA GPUs and Kubernetes, model fine-tuning, and grounding techniques like Retrieval-Augmented Generation (RAG), which pairs a model with vector databases such as PGVector or Milvus to retrieve relevant firm documents at query time.
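To show what the retrieval step in RAG does, the following is a minimal, self-contained sketch. It makes loud simplifying assumptions: the `embed` function is a toy word-count stand-in for a real embedding model, the in-memory list stands in for a vector database, and the documents and query are invented. It is not any vendor's implementation.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Prepend retrieved context so the model answers from firm documents."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


# Invented internal documents (stand-in for a vector database).
DOCS = [
    "credit risk policy limits exposure to any single counterparty",
    "travel expense policy for employees",
    "counterparty exposure report for the credit desk",
]
QUERY = "what is the counterparty credit exposure limit"

top = retrieve(QUERY, DOCS, k=2)
prompt = build_prompt(QUERY, top)
print(prompt)
```

The design point is that the proprietary documents stay in the firm's own store and are supplied to the model only at query time, which is why RAG is often paired with closed deployments.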

The ROI Enigma: Moving Beyond Hype to Value

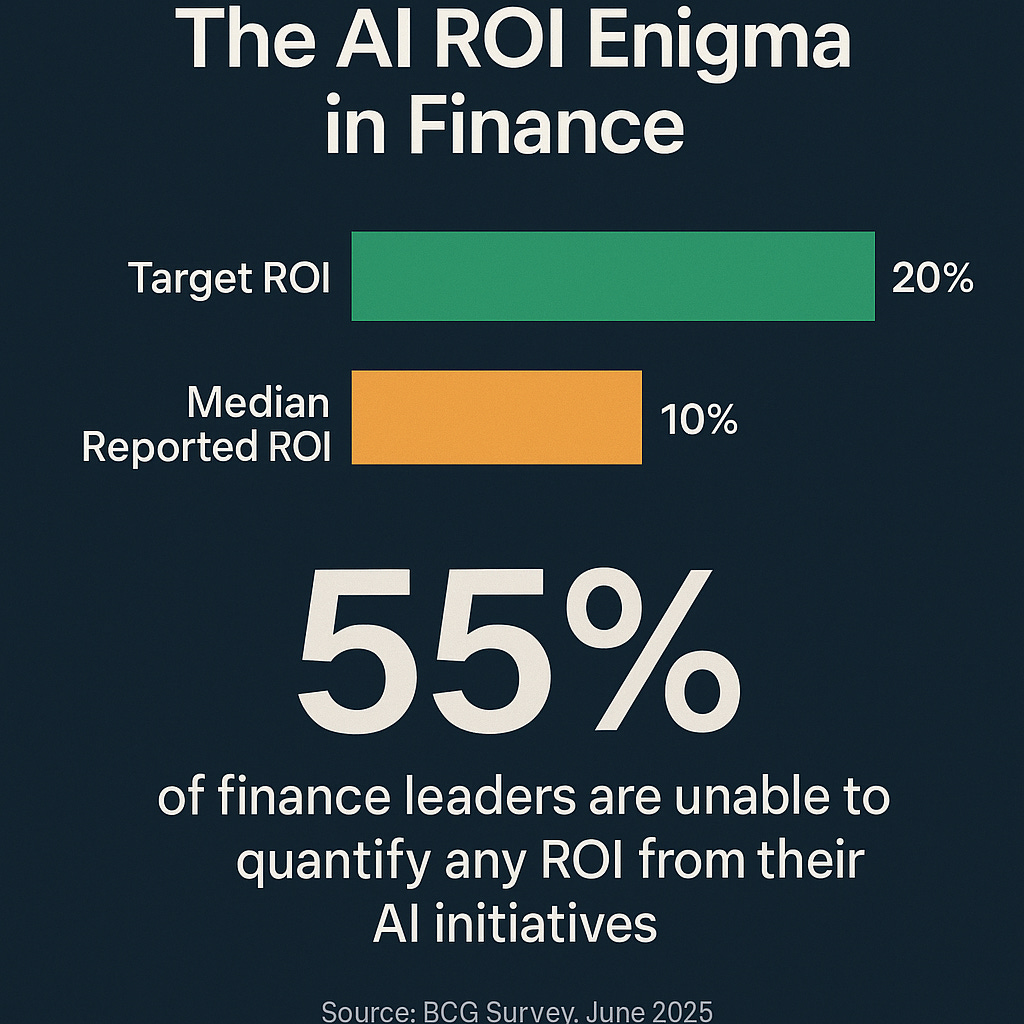

Despite the significant and growing investment in AI, achieving a clear and compelling return on that investment remains an elusive goal for many. A landmark survey by Boston Consulting Group (BCG) reveals a stark reality: while ambition is high, financial returns are mixed. Only 45% of finance executives surveyed can actually quantify the ROI from their AI initiatives. Among those who can, the median reported ROI is a mere 10%, falling well short of the 20% threshold that many organizations are targeting [19].

The ROI Paradox: Why Measurement May Be the Problem

This obsession with measuring AI ROI may itself be counterproductive. Consider several critical flaws in the ROI framework when applied to transformative technology:

The McNamara Fallacy: We optimize for what we can measure, not what matters. The most transformative impacts of AI—opening new markets, preventing unseen risks, enabling previously impossible strategies—don't fit neatly into ROI calculations.

Option Value Blindness: AI creates capabilities that have enormous option value. Like asking "what's the ROI of hiring smart people?" or "what's the ROI of internet access?", the question misunderstands the nature of platform technologies.

Competitive Necessity: In many cases, AI adoption isn't about positive ROI—it's about survival. Not adopting AI while competitors do is like asking about the ROI of email in 2000. The ROI of irrelevance is negative infinity.

Transformation vs. Optimization: ROI metrics work for optimization projects but fail for transformation. The ROI of moving from horses to automobiles wasn't calculable in horse-efficiency terms.

BCG's finding that firms struggle to quantify ROI might not indicate AI failure—it might indicate ROI framework failure. The most successful firms may be those that recognize when traditional financial metrics become impediments to necessary transformation. As one contrarian executive noted: "We don't calculate the ROI of AI any more than we calculate the ROI of thinking."

Evidence of tangible value does exist alongside these measurement problems: a separate survey from Bain & Company found an average productivity gain of 20% from the deployment of generative AI, with software development and customer service realizing the most significant benefits [20].

Governance as Learning: Building Frameworks Through Experience

The successful enterprise-wide adoption of AI requires a fundamental shift in how we think about governance. Traditional governance assumes we understand the systems we're governing, an assumption that breaks down with agentic AI. Effective AI governance is not a prerequisite to deployment but rather an emergent property that develops through careful experimentation and rapid learning cycles.

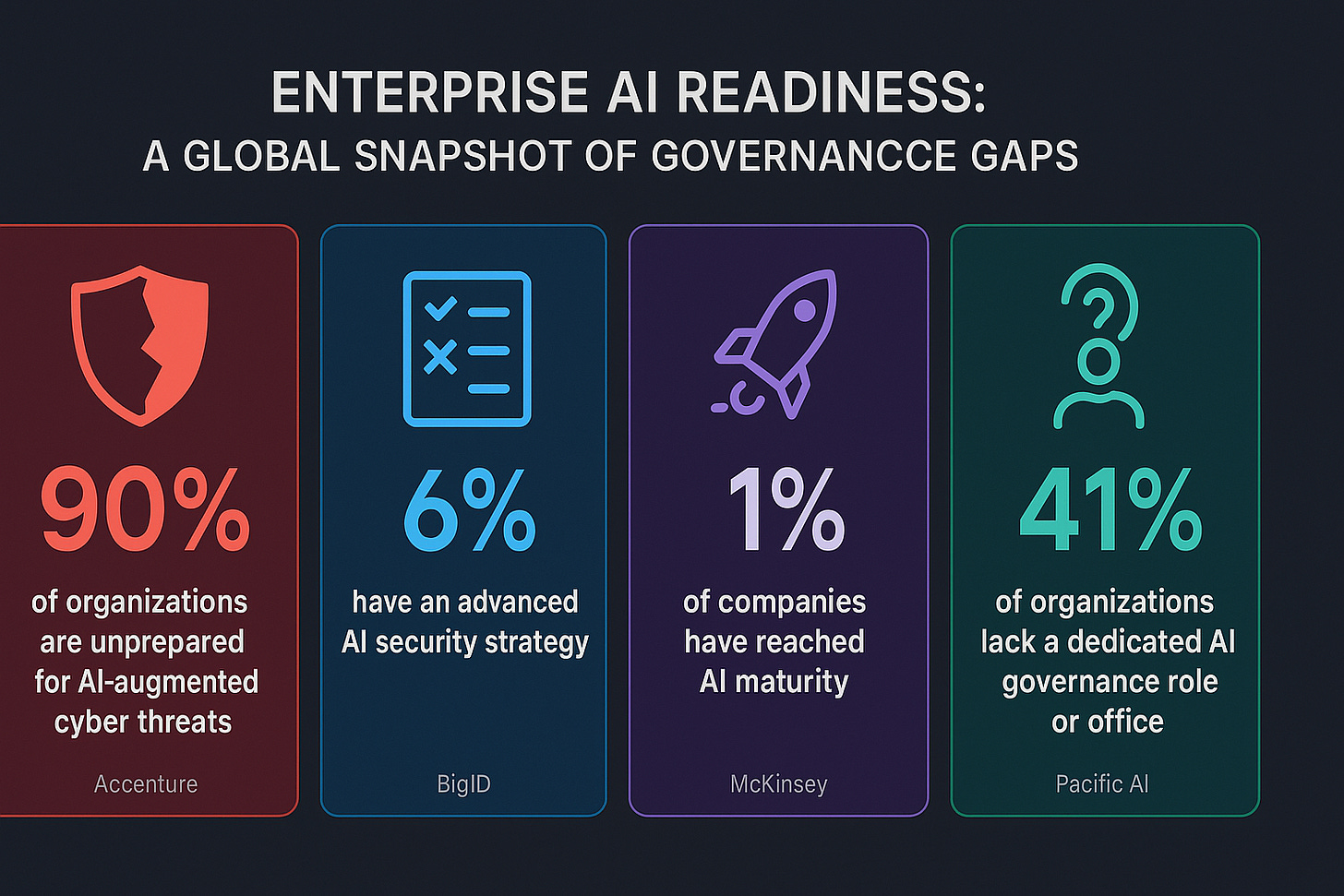

The urgency of this task is underscored by a finding from Accenture: 90% of organizations are not adequately prepared to secure their operations against the new risks introduced by AI [21]. However, the solution is not to build comprehensive frameworks in advance; it is to develop adaptive governance capabilities that can evolve as quickly as the technology itself.

Why Governance-First Fails with Agentic AI:

Unknown Unknowns: We cannot govern what we don't understand. Agentic AI systems exhibit emergent behaviors that are impossible to predict without deployment.

Innovation Paralysis: Waiting for "complete" governance frameworks means waiting forever, as the technology evolves faster than our ability to codify rules.

Misaligned Controls: Governance built on theoretical risks often misses actual risks while constraining beneficial uses we hadn't imagined.

Competitive Disadvantage: While governance-first organizations deliberate, learning-first organizations are discovering what actually works.

The Agile Governance Alternative:

Start Small: Begin with low-risk, reversible experiments that can teach without catastrophic failure

Build Learning Loops: Every deployment should generate governance insights that immediately inform the next iteration

Embrace Failure: Controlled failures in sandbox environments are governance investments, not setbacks

Scale Governance with Capability: As AI capabilities expand, governance should grow organically to match

In response to this governance deficit, leading advisory firms and technology providers are developing comprehensive frameworks to help organizations build and deploy AI responsibly. Two notable examples stand out:

PwC's Agent OS: This platform is designed to function as a unified framework or a central "switchboard" for enterprise AI. It allows firms to build, orchestrate, and integrate disparate AI agents from a wide range of platforms, including those from AWS, Google, and OpenAI, and connect them with core enterprise systems like SAP and Salesforce. By providing a single point of control and an integrated risk management layer, Agent OS aims to accelerate the creation of complex AI-driven workflows by up to ten times while simultaneously enhancing governance and oversight [22].

KPMG's Trusted AI Framework: This is a human-centric, risk-tiered governance approach built upon ten core ethical pillars, including Fairness, Explainability, Accountability, Reliability, and Security. The framework is designed to be operationalized through technology platforms like OneTrust, which enables the automation of risk assessments, the creation of a centralized AI use case inventory, and the continuous monitoring of compliance with both internal policies and external regulations [23].
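A risk-tiered approach backed by a centralized use case inventory can be pictured with a small sketch. Everything here is a hypothetical illustration: the risk factors, the tiering rule, and the example use cases are assumptions chosen for clarity, and they do not reproduce KPMG's framework or the OneTrust platform.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AIUseCase:
    name: str
    uses_client_data: bool
    acts_autonomously: bool
    customer_facing: bool


def assign_tier(use_case: AIUseCase) -> RiskTier:
    """Hypothetical rule: the more risk factors present, the higher the tier."""
    factors = sum([use_case.uses_client_data,
                   use_case.acts_autonomously,
                   use_case.customer_facing])
    if factors >= 2:
        return RiskTier.HIGH
    if factors == 1:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Centralized inventory of (invented) AI use cases.
inventory = [
    AIUseCase("public news summarizer", False, False, False),
    AIUseCase("internal research assistant", True, False, False),
    AIUseCase("autonomous trade execution agent", True, True, False),
]

tiers = {uc.name: assign_tier(uc) for uc in inventory}
for name, tier in tiers.items():
    print(f"{name}: {tier.name}")
```

In practice the tier would determine how much review, monitoring, and documentation each use case receives, so that low-risk experiments are not held to the same process as autonomous, client-affecting systems.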

A critical, cross-cutting theme in all effective governance models is the principle of Human-in-the-Loop (HITL). Despite the push towards autonomy, conventional wisdom insists that human oversight remains a non-negotiable component of responsible AI deployment.

Rethinking Human-in-the-Loop: From Bottleneck to Breakthrough

However, the reflexive insertion of humans into AI decision loops may create more problems than it solves:

Automation Bias: Research consistently shows that humans tasked with overseeing AI systems often become complacent, rubber-stamping AI decisions without meaningful review. The human becomes a liability shield rather than a quality control.

Speed Degradation: In high-frequency trading, fraud detection, and cyber defense, human checkpoints can slow responses from microseconds to minutes—potentially catastrophic delays that eliminate AI's advantages.

Accountability Diffusion: When both human and AI are involved, responsibility becomes unclear. Did the human fail to catch the AI's error, or did the AI fail to flag the issue for the human? This ambiguity can be worse than clear AI accountability.

Cognitive Overload: Humans asked to review hundreds of AI decisions daily quickly exceed their cognitive capacity, making oversight theatrical rather than substantive.

The Alternative: Human-on-the-Loop

Instead of humans in the loop, we should consider "human-on-the-loop" architectures:

Strategic Oversight: Humans set objectives, constraints, and success metrics, but don't intervene in individual decisions

Pattern Monitoring: Humans watch for systemic issues and emergent behaviors across thousands of decisions

Exception Handling: Humans handle only truly novel situations outside AI training parameters

Post-Hoc Analysis: Humans analyze AI decision patterns to improve future performance

This approach preserves AI's speed and consistency advantages while maintaining human governance where it actually adds value. Even as agentic AI systems become more capable of autonomous action, the requirement isn't for constant human intervention but for thoughtful human architecture of the systems themselves.
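The four human-on-the-loop roles described above can be sketched as a small controller. This is a minimal illustration under stated assumptions: the class names, the notional limit, and the allowed actions are hypothetical, and a production system would need far richer constraints and monitoring.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Constraints:
    """Strategic oversight: limits that humans set up front."""
    max_notional: float
    allowed_actions: frozenset


@dataclass(frozen=True)
class Decision:
    action: str
    notional: float


@dataclass
class OnTheLoopController:
    constraints: Constraints
    audit_log: list = field(default_factory=list)     # feeds post-hoc analysis
    escalations: list = field(default_factory=list)   # queue for human exception handling

    def handle(self, decision: Decision) -> str:
        """Execute decisions inside the constraints; escalate everything else."""
        within = (decision.action in self.constraints.allowed_actions
                  and decision.notional <= self.constraints.max_notional)
        outcome = "executed" if within else "escalated"
        if not within:
            self.escalations.append(decision)
        self.audit_log.append((decision, outcome))
        return outcome

    def escalation_rate(self) -> float:
        """Pattern monitoring: share of decisions that needed a human."""
        return len(self.escalations) / len(self.audit_log) if self.audit_log else 0.0


controller = OnTheLoopController(Constraints(1_000_000.0, frozenset({"buy", "sell"})))
results = [controller.handle(d) for d in (
    Decision("buy", 50_000.0),       # inside limits
    Decision("sell", 5_000_000.0),   # exceeds the notional limit
    Decision("short", 10_000.0),     # action not on the allowed list
)]
print(results, f"escalation rate: {controller.escalation_rate():.2f}")
```

No human sits in the execution path for routine decisions. People define the constraints, review the escalation queue, and watch aggregate signals such as the escalation rate for signs of systemic drift.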

The data reveals what might be called a "sequencing revolution" in enterprise AI strategies. Traditional thinking suggests firms should establish comprehensive governance before pursuing ROI, but this assumes we know what to govern. The most innovative firms are discovering that the sequencing should be reversed: controlled experiments that chase ROI reveal what governance is actually needed.

This is not recklessness; it is recognition that:

ROI experiments reveal real risks: Theoretical governance addresses theoretical risks; actual deployment reveals actual risks

Value discovery drives appropriate controls: Understanding what AI can do informs what controls it needs

Governance without experience is guesswork: Rules written before deployment are almost certainly wrong

The inability of many firms to quantify their AI ROI may not be due to insufficient governance, but rather to excessive governance that prevented the experimentation needed to discover value. The most successful firms are not simply "AI-first" or "governance-first"; they are "learning-first," understanding that governance and value creation are discovered together, not sequentially.

Furthermore, there is a clear symbiotic relationship between the strategic trend towards closed LLMs and the pursuit of the highest-value AI use cases in risk and forecasting. The top ROI applications in finance are those that involve risk management and financial forecasting, functions that are intrinsically reliant on a firm's most sensitive and proprietary data assets, such as client transaction histories, internal risk models, and proprietary market signals. The primary drivers for adopting closed LLMs are data sovereignty, security, and the ability to fine-tune on this very same proprietary data. It becomes evident that using a public, third-party API for these core, value-generating functions is a non-starter from a security, compliance, and competitive standpoint. Therefore, the decision to build or utilize a closed LLM is not merely a technical choice but a strategic one, driven by the intention to apply AI to the firm's "crown jewels."

This suggests a future market that will likely bifurcate: public and open-source models will be used for lower-risk, commoditized tasks like summarizing public news or powering general-purpose chatbots, while closed, proprietary models will be deployed for high-value, core business functions. This creates a new and critical competitive dimension: a firm's ability to successfully build, deploy, and manage a secure, in-house AI environment will be a key determinant of its capacity to generate alpha from the technology.

The Human-AI Symbiosis: Redefining Talent and the Future of Work

The integration of artificial intelligence into the financial services sector is catalyzing a profound transformation of the workforce. This change extends far beyond the simplistic narrative of mass job displacement. Instead, it is a complex recalibration of roles, skills, and organizational structures. The industry is witnessing the emergence of new, resilient human roles designed to work in concert with AI, a widening skills gap that poses a strategic risk to growth, and a corresponding imperative for firms to fundamentally rethink their approaches to talent development and workflow design.

Beyond Displacement: The Rise of Resilient Human Roles

The consensus among industry leaders and researchers is that the AI revolution in finance is not about replacing human capital but about redefining its role. The World Economic Forum's (WEF) Future of Jobs research provides a quantitative forecast for this shift: the 2020 edition of the report predicted that while automation would displace approximately 85 million jobs globally by the end of 2025, the same technological wave would simultaneously create 97 million new roles better suited to a future of human-AI collaboration, a net gain of roughly 12 million [24]. This evolution is giving rise to a new class of "resilient" human roles that are becoming strategically vital.

Three categories of these resilient roles stand out:

AI Coaches and Prompt Engineers: As firms deploy sophisticated AI systems, a critical need has emerged for skilled professionals who can train, fine-tune, and continuously refine the outputs of these models. These individuals act as coaches, ensuring that the AI's performance aligns with specific business objectives, adheres to regulatory standards, and maintains a high level of accuracy.

Ethics and Regulatory Compliance Experts: The increasingly complex global regulatory landscape for AI, exemplified by measures like the EU AI Act, is creating significant demand for experts who can navigate these rules. These roles are essential for ensuring that AI models are developed and deployed in a manner that is transparent, unbiased, secure, and fully compliant with the law.

Customer Experience and Empathy-Driven Roles: Paradoxically, the rise of generative AI's ability to deliver personalized services at scale has reignited the demand for roles that focus on uniquely human attributes like emotional engagement and trust-building. Research has demonstrated that humans remain far superior to AI in tasks that require persuasion, nuance, and emotional connection, such as collecting on delinquent debts. This is leading firms to invest in human-centric experience designers who can build trust and rapport with clients in an increasingly digitized world.

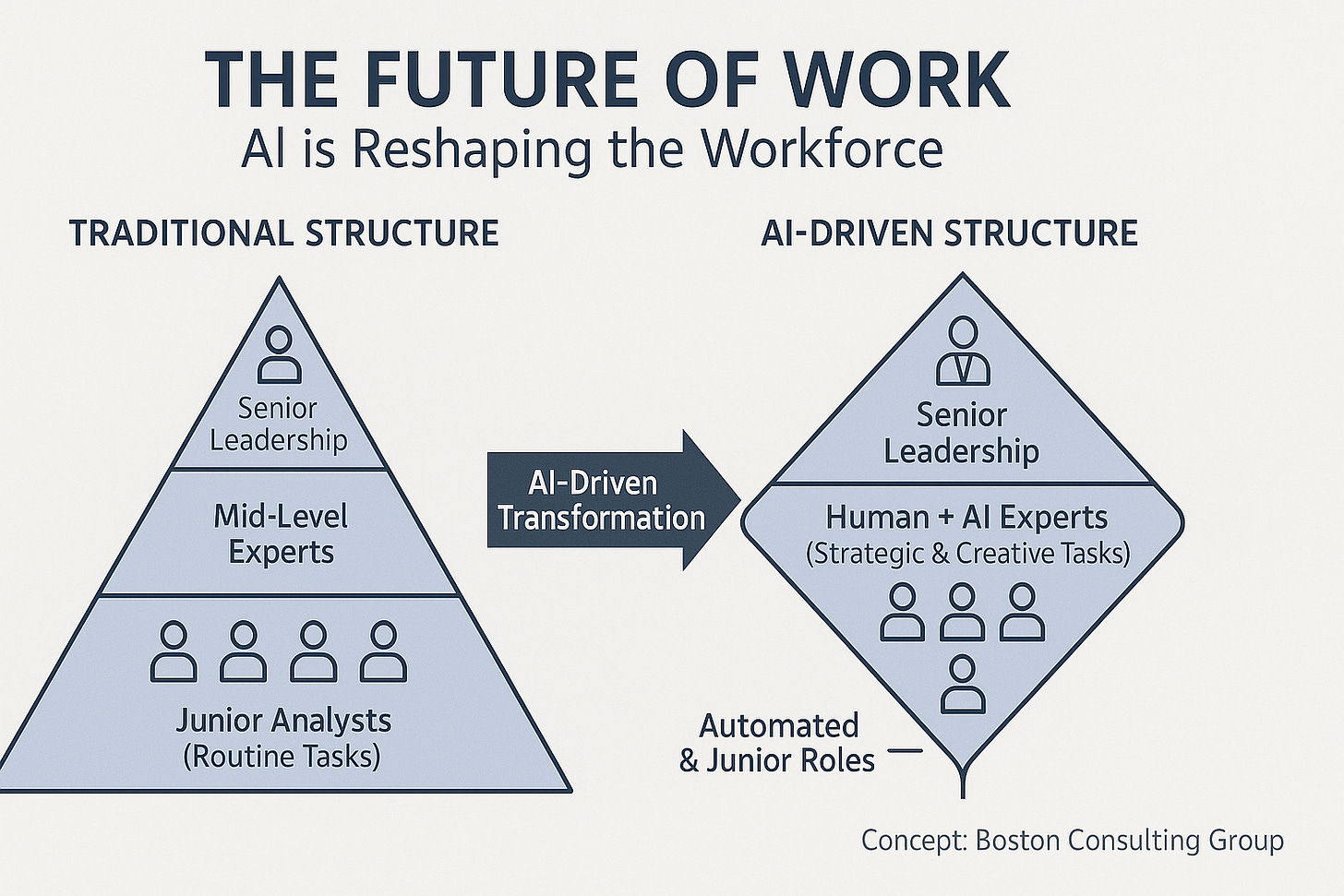

This redefinition of roles is also reshaping organizational structures. Leading consulting firms like BCG are already observing and implementing a strategic shift away from the traditional "pyramid" structure, which relies on a large base of junior analysts performing routine tasks. Instead, they are moving towards a "diamond" shape, with a leaner junior level and an expanded mid-level of experts who possess the hybrid skills necessary to bridge the gap between advanced technology and strategic business application.

The Widening Skills Gap: A Strategic Risk

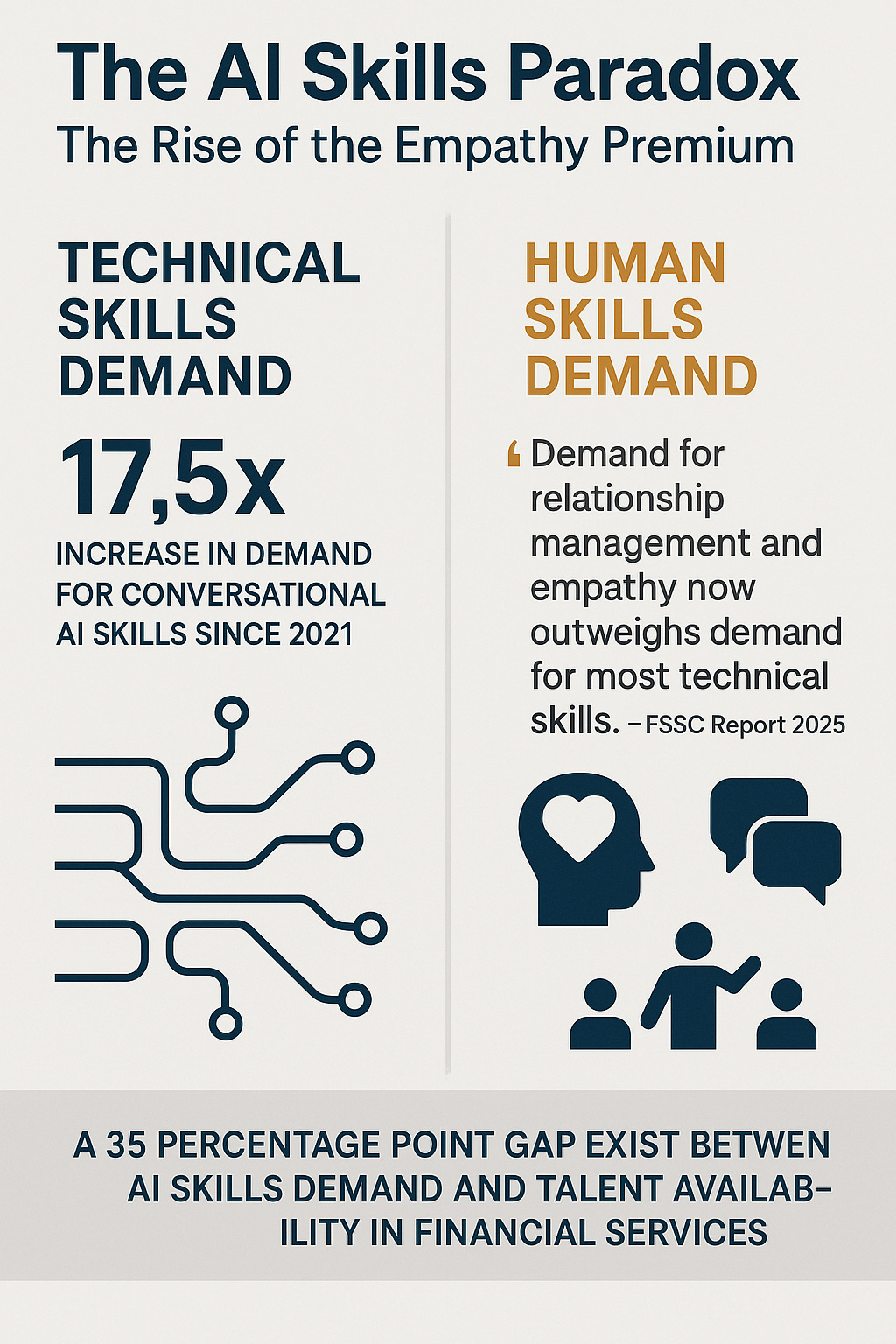

While the future promises a symbiotic relationship between humans and AI, a significant obstacle stands in the way: a critical and widening skills gap. A recent report from the UK's Financial Services Skills Commission (FSSC) has labeled this gap a "major strategic risk," quantifying it as a 35 percentage point deficit between the demand for AI-related skills and the available talent within the sector [25].

This risk is compounded by a significant training lag. A global survey by BCG reveals that a mere 33% of employees report having received adequate training to use AI tools effectively [26]. This has created a stark divide within organizations, where executive and leadership teams are rapidly adopting AI, while frontline employees are being left behind. This lack of widespread training not only hinders productivity but also introduces significant risks related to cybersecurity, operational consistency, and the ad-hoc use of unapproved tools.

The nature of the skills in highest demand presents a further paradox. Despite a reported 17.5-fold increase in demand for technical skills related to conversational AI since 2021, the FSSC report finds that the demand for human-centric skills like relationship management and empathy now outweighs the demand for most technical skills. This is echoed by the WEF, which identifies analytical thinking, resilience, flexibility, and agility as the most sought-after core skills by employers.

This disconnect between the technology being deployed and the skills being developed is fueling a growing sense of anxiety within the workforce. The BCG survey found that 46% of workers at companies undergoing significant AI-driven transformation are concerned about their future job security.

The Strategic Response: Reskilling and Redesigning Work

The clear and urgent strategic response to this challenge is a two-pronged approach focused on aggressive reskilling and the fundamental redesign of work processes. The WEF has highlighted the critical need for upskilling, estimating that 39% of a worker's core skills will be disrupted or transformed by 2030. In response, over 45% of firms are already in the process of reskilling their employees to work effectively with generative AI.

However, training alone is insufficient. The most advanced companies, particularly in the financial services and technology sectors, are moving beyond simply layering AI tools onto their existing processes. They are fundamentally redesigning their core workflows around the capabilities of AI. This deeper, more integrated approach is shown to yield greater time savings and allows human employees to shift their focus from routine execution to higher-value strategic tasks. The ultimate goal of a successful strategy is not replacement, but augmentation. Investors and strategists are now advised to back firms that are demonstrably investing in research and development for tools and processes that enhance and amplify human capabilities, rather than those focused purely on automation and cost-cutting.

In an industry being rapidly reshaped by data-driven, analytical AI, conventional wisdom suggests a fascinating paradox: the most valuable and sought-after skills are becoming the uniquely human ones that cannot be easily replicated by machines. The FSSC report's finding that the demand for empathy and relationship management now outweighs the demand for most technical skills seems to confirm this narrative. This is reinforced by the WEF's identification of analytical thinking, resilience, and social influence as top-tier core skills.

The Deeper Paradox: When AI Out-Humans Humans

However, this comforting narrative of an "empathy premium" may be dangerously wrong. Evidence is mounting that AI may soon surpass humans even in supposedly "human" domains:

Emotional Intelligence: AI therapists and counselors are already showing superior outcomes in some contexts—they're infinitely patient, never judge, remember everything, and can detect micro-expressions humans miss.

Relationship Management: AI can maintain perfect memory of thousands of client preferences, anticipate needs with predictive analytics, and never have a bad day. The "authentic human connection" may matter less than consistent, personalized service.

Creative Problem Solving: While we celebrate human creativity, much of it is pattern matching—exactly what AI excels at. AI can explore solution spaces far beyond human cognitive limits.

The Real Human Advantage: Creative Irrationality

If AI can be more analytical AND more empathetic, what's left for humans? Perhaps our true value lies in what makes us flawed:

Authentic Irrationality: Making leaps that don't follow from the data, believing in impossible things, pursuing quixotic goals

Genuine Vulnerability: Clients may value real human struggle and imperfection—the advisor who also lost money in 2008

Rule Breaking: Knowing when to ignore the system, break protocol, or pursue a hunch against all evidence

Existential Creativity: Creating meaning where none exists, telling stories that make random events feel purposeful

Productive Conflict: Generating friction, disagreement, and dialectical progress through stubborn humanity

The future premium may not be on "soft skills" but on "chaotic skills"—the uniquely human ability to introduce beneficial randomness into overly optimized systems. Financial firms might need to hire not for emotional intelligence but for creative dysfunction—the mavericks, rebels, and dreamers who ensure AI doesn't optimize us into a local maximum.

While the focus on new skills is critical, the structural changes in the workforce present what many see as a significant long-term risk. The observed shift in organizational design from a traditional "pyramid" to a "diamond" shape threatens to erode the industry's future talent pipeline. Historically, the large base of the pyramid, comprising junior analysts performing routine tasks like market research, data organization, and presentation preparation, has served as the primary training ground for the next generation of senior leaders.

The Contrarian Opportunity: Leapfrogging Legacy Development

But what if this "hollowing out" is not a bug but a feature? The elimination of junior grunt work presents a once-in-a-generation opportunity to reimagine professional development:

Skip the Hazing: The traditional model forces talented individuals to spend years on mind-numbing tasks as a rite of passage. This isn't training—it's organizational hazing that wastes human potential and drives away diverse talent who can't afford to be underpaid for years.

Direct-to-Strategic: With AI handling routine analysis, we can train new entrants on high-level strategic thinking from day one. Instead of building PowerPoints, they could be solving real business problems with AI as their analytical partner.

Non-Linear Career Paths: The pyramid model assumes everyone starts at 22 and climbs linearly. AI enables multiple entry points—experienced professionals from other industries can jump directly to strategic roles without grinding through junior years.

Apprenticeship Is Obsolete: The apprenticeship model made sense when senior professionals' knowledge was irreplaceable. Now, AI can transfer technical knowledge instantly. What new professionals need isn't task training but judgment development.

Redesigning Entry-Level Roles

Instead of mourning the loss of traditional junior roles, firms should celebrate the opportunity to create more engaging entry positions:

AI Orchestrators: Junior professionals who manage teams of AI agents

Strategy Analysts: Skip the data gathering and go straight to interpretation

Client Experience Designers: Focus on human elements from the start

Innovation Catalysts: Dedicated roles for experimenting with new AI applications

This necessitates a complete overhaul of talent strategies. Rather than hiring for willingness to do grunt work, firms should seek intellectual curiosity, systems thinking, and comfort with ambiguity. The future of finance might be smaller teams of higher-capability individuals, each commanding armies of AI agents. This isn't a talent crisis—it's a talent revolution.

The Competitive Arena: M&A, Partnerships, and the Search for Defensible Moats

The pervasive influence of artificial intelligence is not just transforming internal operations; it is fundamentally reshaping the competitive landscape of the financial services industry. This is driving a new wave of strategic merger and acquisition (M&A) activity and forcing a critical re-evaluation of what constitutes a sustainable competitive advantage. In an era where technology has made it easier than ever to build a product, it has become harder than ever to build a defensible business.

The M&A Landscape: A Capability-Driven Consolidation

The global FinTech M&A market remains robust, with a clear trend towards strategic, capability-driven consolidation. Data shows that strategic buyers are dominating the market, accounting for 68.3% of all deals as they pursue vertical integration to acquire and bolster their technology stacks. The primary rationale for these acquisitions is no longer just market share or geographic expansion, but the urgent need to acquire critical new capabilities, particularly in the realms of artificial intelligence and advanced data analytics.

Recent transactions provide clear evidence of this trend. Pagaya's acquisition of Theorem was explicitly aimed at strengthening its AI and data analytics capabilities for investment strategies [27]. Similarly, Base.ai acquired Laudable to integrate its AI-powered customer conversation analysis and engagement tools, a move designed to build a leadership position in the customer-led growth (CLG) space [28]. This focus on acquiring specific, high-value technological assets is a defining feature of the current M&A environment. Alongside this capability-driven trend, the persistence of high interest rates and broader cost pressures continues to favor traditional scale deals, especially in high-fixed-cost segments of the financial services industry where consolidation can yield significant synergies.

Beyond outright acquisitions, the market is characterized by a dense and rapidly evolving ecosystem of strategic partnerships. This collaborative web connects players across the industry spectrum. FinTechs are partnering with traditional banks, such as 10x Banking's collaboration with Constantinople, to leverage established distribution channels and client bases [29]. Data providers are partnering with SaaS firms, like the integration of SOLVE's pricing tool into Investortools' platform, to embed their services directly into user workflows [30]. Technology firms are partnering with cloud giants, as seen in NayaOne's collaboration with Google Cloud, to accelerate innovation and scalability [31]. The digital asset space is also a hotbed of partnership activity, with firms like Wyden and Garanti BBVA Kripto, and dLocal and BVNK, forming alliances to build out the essential infrastructure for trading and payments.

The Commoditization Paradox: Easier to Build, Harder to Defend

The rise of generative AI has introduced a fascinating and challenging paradox into the competitive dynamics of the FinTech sector. On one hand, the barrier to building software has been dramatically lowered. Powerful, pre-trained AI models and code-generation tools allow startups to develop products and reach initial revenue milestones, such as $1 million in Annual Recurring Revenue (ARR), faster than ever before.

On the other hand, this same accessibility makes it profoundly more difficult to build a defensible, long-term business. The underlying AI models themselves are rapidly becoming commoditized, with multiple providers offering similar core capabilities. This has led to a proliferation of startups that are little more than thin "wrappers on OpenAI," lacking any genuine, sustainable competitive advantage or "moat." This dynamic has direct financial consequences. Analysis shows that many AI-native companies exhibit weaker financial profiles than their traditional SaaS counterparts, with gross margins often in the 50-60% range, compared to the 60-80%+ benchmark for established SaaS businesses. This is a direct result of their reliance on costly third-party cloud infrastructure and the ongoing human support required to manage edge cases, coupled with weaker overall defensibility.
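The margin gap follows directly from the gross margin formula, (revenue minus cost of revenue) divided by revenue. The sketch below uses assumed cost figures chosen only to land inside the ranges quoted above; they are not drawn from the underlying analysis.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


REVENUE = 1_000_000.0  # assumed annual recurring revenue

# Assumed cost structure for a traditional SaaS business.
hosting = 150_000.0
support = 100_000.0
traditional = gross_margin(REVENUE, hosting + support)

# The same business with added third-party model costs and
# extra human handling of edge cases (both assumed).
model_inference = 150_000.0
edge_case_support = 50_000.0
ai_native = gross_margin(REVENUE, hosting + support + model_inference + edge_case_support)

print(f"Traditional SaaS gross margin: {traditional:.0%}")
print(f"AI-native gross margin:        {ai_native:.0%}")
```

Under these assumptions the traditional business earns a 75% gross margin and the AI-native one 55%. The difference is entirely the per-usage model cost and the additional human support, both of which scale with revenue rather than shrinking as the business grows.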

Architecting Modern Moats in the AI Age

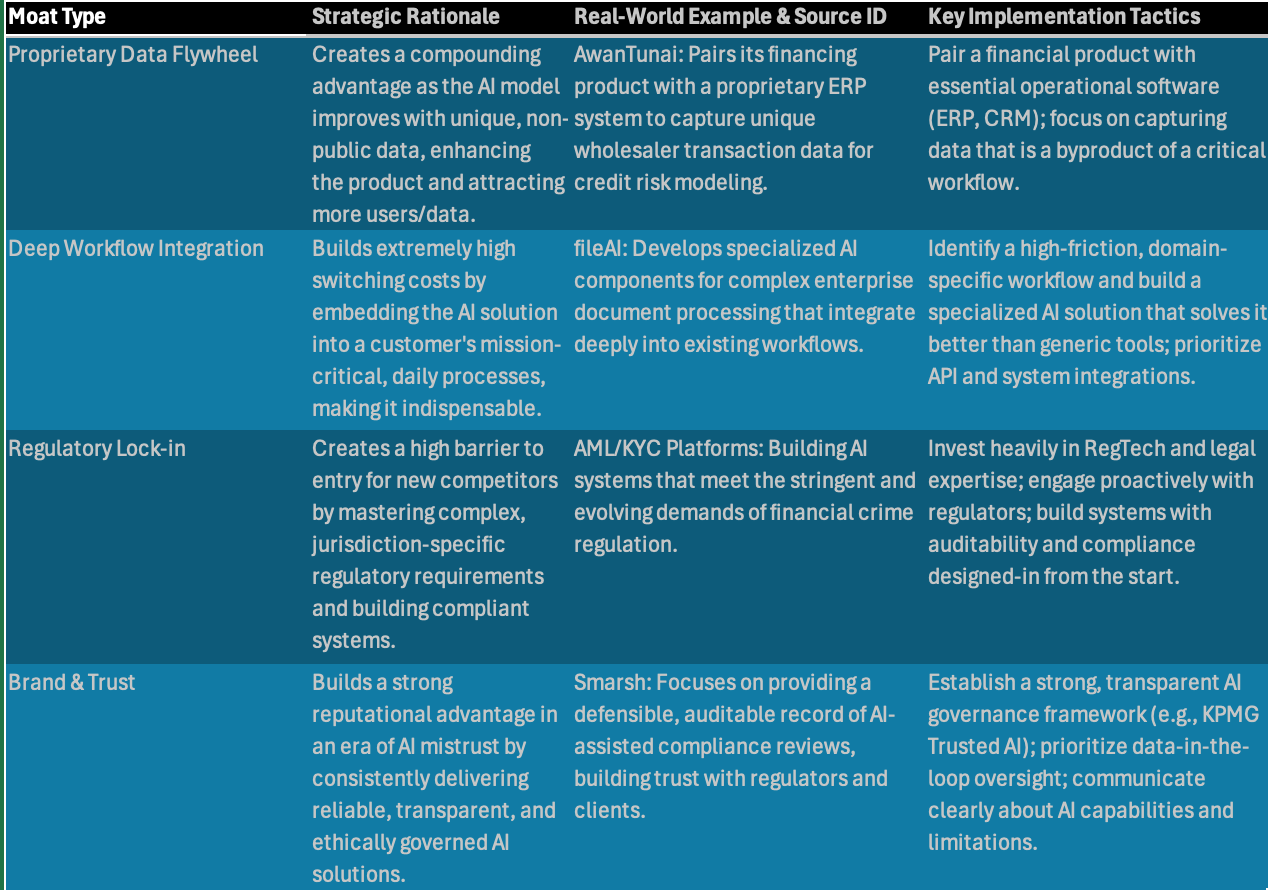

This new competitive environment has created a "moat mandate." For founders seeking capital and for incumbents seeking to defend their market position, the single most important strategic question has become: "How defensible is your business?" A successful answer requires moving beyond traditional sources of advantage and architecting a new set of moats specifically designed for the AI age. The research points to several key sources of modern defensibility:

Proprietary Data: This has emerged as the most powerful and durable moat. The strategy involves more than just accumulating data; it requires creating a "data flywheel." This is achieved by deeply integrating a product into a customer's core workflow, allowing the firm to capture unique, proprietary data that is not available to competitors. This data is then used to train and improve the firm's AI models, which in turn makes the product more valuable and stickier, generating even more data. A prime example is AwanTunai, which pairs its inventory financing product with a proprietary ERP system for wholesalers. The transaction data captured through the ERP system allows AwanTunai to build a superior credit risk model, a compounding advantage that is incredibly difficult to replicate [32].

Deep Workflow Integration: Closely related to the data moat is the strategy of embedding an AI-powered solution so deeply into a customer's mission-critical workflows that it becomes nearly impossible to replace. This creates extremely high switching costs. The FinTech fileAI has successfully built such a moat by developing highly specialized AI components for complex enterprise document processing, which are then integrated seamlessly into their clients' core operational systems [33]. This level of specialized, integrated functionality is a defense that generic, horizontal AI solutions struggle to overcome.

Regulatory Compliance: In a heavily regulated industry like finance, the ability to navigate complex regulatory requirements and secure the necessary approvals can itself be a formidable moat. Building systems that are compliant with intricate rules for Anti-Money Laundering (AML), Know Your Customer (KYC), and data privacy creates a significant barrier to entry for new, less-resourced competitors.

Brand and Trust: In an environment where AI models can be opaque "black boxes" and are known to produce erroneous outputs or "hallucinations," a trusted brand becomes an invaluable asset. Firms that can consistently deliver reliable, transparent, and ethically governed AI solutions will build a powerful reputational moat that fosters customer loyalty and is difficult for new entrants to challenge.

Table 2: Architecting a Defensible AI Moat

The strategic rationale for M&A in the financial technology sector is undergoing a significant evolution. While traditional scale-driven consolidation remains a factor, the most sophisticated acquirers are now engaged in a new kind of hunt: they are "buying a moat." The "commoditization paradox," in which AI makes it easy to build a product but hard to build a defensible business, is forcing a strategic re-evaluation. Acquirers recognize that purchasing a FinTech that is merely a thin "AI wrapper" is a poor long-term investment. The real, durable value lies in acquiring companies that have already solved the difficult, foundational problems of embedding themselves into their customers' lifeblood: their unique data and their critical workflows.

The acquisition of Laudable by Base.ai is a case in point; Base.ai did not just buy a product, it bought an AI engine that analyzes customer conversations, a proprietary data source that can fuel a data flywheel. Similarly, Pagaya's acquisition of Theorem was explicitly to enhance its core AI and data analytics capabilities. This indicates that acquirers are now targeting the raw materials of a defensible AI strategy.

This will inevitably drive up valuations for FinTech firms that can clearly demonstrate a true data or workflow moat. For founders seeking to build valuable companies, the strategic narrative must shift from "We use AI" to "We possess a unique and defensible data asset that powers our AI." For acquirers, the due diligence process must now include a rigorous, technical assessment of a target's data quality, the depth of its workflow integration, and the long-term defensibility of its data acquisition strategy.

In parallel with this M&A trend, a "partnership-led moat" is emerging as a powerful defensive strategy. In a complex ecosystem where no single company can own the entire value chain, strategic partnerships are becoming a form of defensibility in themselves. The research is replete with examples of these alliances: FinTechs partnering with incumbent banks, data providers with SaaS firms, crypto platforms with payment providers, and technology firms with cloud giants. The underlying logic is that while a single company cannot build everything, a tightly integrated network of partners can offer a comprehensive, end-to-end solution that is exceedingly difficult for a standalone competitor to challenge. The whole becomes greater and more defensible than the sum of its parts. This elevates the competitive unit from the individual firm to the ecosystem or network of firms. A company's moat is therefore defined not just by its own proprietary technology, but by the strength, depth, and stickiness of its integrations with its partners. This has significant organizational implications. Business development and partnership teams become as strategically vital as product and engineering teams. The ability to identify, negotiate, and maintain a network of deep, technical partnerships is now a core competitive skill. It also suggests a new rationale for M&A, where a company might be acquired not just for its own technology, but for the valuable and extensive partnership network it has already built.

The Regulatory Horizon: Navigating Global Compliance in an AI-Driven World

As artificial intelligence becomes more deeply embedded in the core functions of the financial services industry, a complex and rapidly evolving global regulatory landscape is taking shape. Regulators in key jurisdictions are moving from a reactive to a proactive stance, seeking to foster innovation while simultaneously mitigating the new risks posed by the technology. This section provides a comprehensive overview of the current regulatory environment, focusing on the distinct but converging approaches of the U.S. Securities and Exchange Commission (SEC), the UK's Financial Conduct Authority (FCA), and the EU's European Securities and Markets Authority (ESMA), and highlights the key enforcement priorities that are defining the new rules of the road.

A Multi-Jurisdictional View: SEC, FCA, and ESMA

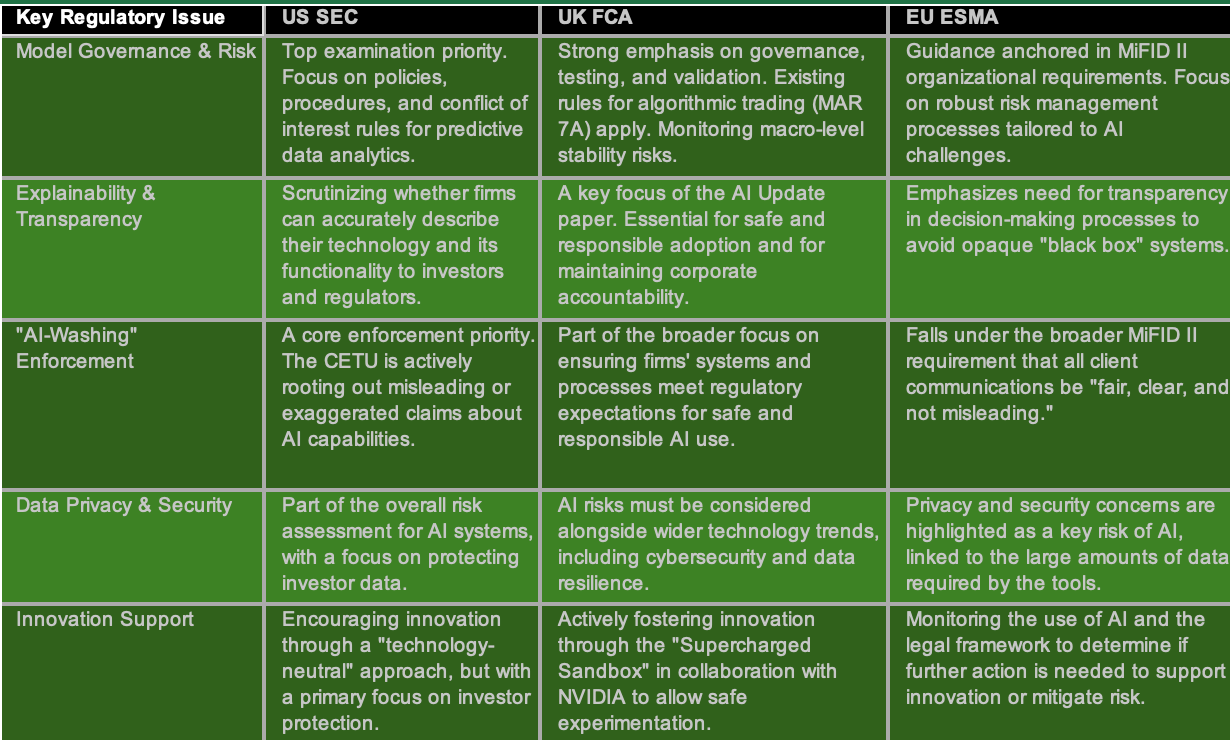

While each jurisdiction has its own specific rules and priorities, a common theme is emerging: regulators are largely seeking to apply existing, principles-based, technology-neutral frameworks to AI, while actively considering where new, specific guidance is required. Across the board, there is a strong emphasis on the core principles of fairness, transparency, accountability, and the necessity of robust governance frameworks.

Table 3: Comparative Global Regulatory Stance on AI in Finance

United States (SEC): The SEC has made it clear that the use of AI by investment firms is a top examination priority. Its focus is on reviewing how firms are using AI in investment strategies, the adequacy of their disclosures to investors, and the robustness of their compliance policies and procedures. A key area of rulemaking is a proposed rule designed to address the conflicts of interest that can arise from the use of predictive data analytics (PDA) in broker-dealer and investment adviser interactions with clients. At the leadership level, the signals are more nuanced. During his tenure as Acting Chair, Mark Uyeda indicated a potential move to reduce the overall number of federally registered investment advisers, while Commissioner Caroline Crenshaw has publicly called for the industry and the SEC to achieve greater precision in defining AI and to develop a deeper understanding of its potential systemic market risks [34].

United Kingdom (FCA): The FCA is pursuing a dual strategy of promoting innovation while maintaining strict oversight. On the innovation front, it has taken a notably proactive step by launching a "Supercharged Sandbox" in collaboration with technology giant NVIDIA. This initiative provides a safe, controlled environment for firms to experiment with new AI applications using advanced computing resources, with the goal of accelerating beneficial innovation [35]. However, the FCA is also firm in its stance that existing regulations, such as the detailed rules governing algorithmic trading (found in MAR 7A of its handbook), continue to apply to AI-driven systems. The regulator's "AI Update" paper emphasizes the critical need for strong corporate governance, rigorous testing and validation of models, and clear explainability [36]. The FCA is also actively monitoring the potential macro-level effects of AI on cybersecurity, market integrity, and overall financial stability.

European Union (ESMA): ESMA has issued formal guidance for firms using AI in the provision of investment services, anchoring its expectations in the existing MiFID II framework. This guidance stresses the importance of organizational governance, robust risk management processes, and the overarching obligation to act in the best interest of the client [37]. A recent ESMA research report has also highlighted a specific market risk: the growing concentration of investment fund portfolios in a small number of large-cap AI-related stocks, which could create systemic vulnerabilities. In a separate but related matter, ESMA is also tackling the regulatory ambiguity surrounding fractional shares—a product often enabled by new technology platforms—due to their inconsistent classification across member states, which creates transparency and reporting challenges.
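The concentration risk flagged in the ESMA research can be made concrete with a simple measurement. The sketch below is illustrative only: the function name, the tickers, the weights, and the set of "AI-related" holdings are all hypothetical, and the Herfindahl-Hirschman Index is a generic concentration measure chosen for the example, not a metric the report attributes to ESMA.

```python
from typing import Dict, Set


def concentration_metrics(
    weights: Dict[str, float], ai_related: Set[str], top_n: int = 5
) -> Dict[str, float]:
    """Summarize how concentrated a portfolio is (illustrative sketch)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("portfolio weights must sum to a positive value")

    # Normalize so the weights sum to 1 even if raw market values are passed in.
    norm = {ticker: w / total for ticker, w in weights.items()}

    # Herfindahl-Hirschman Index: sum of squared weights.
    # It ranges from 1/N (equal weights) up to 1.0 (a single holding).
    hhi = sum(w * w for w in norm.values())

    # Combined weight of the largest top_n positions.
    top_n_share = sum(sorted(norm.values(), reverse=True)[:top_n])

    # Combined weight of holdings tagged as AI-related (the tagging is an assumption).
    ai_share = sum(w for ticker, w in norm.items() if ticker in ai_related)

    return {
        "hhi": hhi,
        "effective_holdings": 1.0 / hhi,
        "top_n_share": top_n_share,
        "ai_share": ai_share,
    }


# Hypothetical five-stock portfolio; "AAA" and "BBB" stand in for AI-related names.
portfolio = {"AAA": 0.30, "BBB": 0.25, "CCC": 0.20, "DDD": 0.15, "EEE": 0.10}
metrics = concentration_metrics(portfolio, ai_related={"AAA", "BBB"}, top_n=2)
print(metrics)
```

Tracking these figures over time, rather than at a single point, is what would reveal the growing concentration the research describes.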

Key Enforcement Priorities: The Crackdown on "AI-Washing"

A clear and consistent enforcement priority that has emerged across jurisdictions is the regulatory crackdown on "AI-washing." This term refers to the practice of firms making misleading, unsubstantiated, or exaggerated claims about their use of artificial intelligence and its capabilities.

The U.S. SEC, in particular, has made this a central focus of its enforcement program. The agency's newly constituted Cybersecurity and Emerging Technologies Unit (CETU) is actively scrutinizing corporate disclosures and marketing materials to determine whether firms are accurately describing their technology [38]. Regulators are asking tough questions: Is the system genuinely using machine learning or other advanced AI techniques, or is the firm simply rebranding existing rule-based automation or robotic process automation (RPA) with the more fashionable "AI" label?

To withstand this scrutiny, the SEC has signaled that it expects firms to have appropriate documentation of their AI systems' technical architecture, data sources, and model governance processes to support any public claims. It also expects firms to conduct ongoing testing to ensure that any performance or scalability claims remain accurate over time. The burden of proof is firmly on the companies making the claims. This focus on rooting out fraud and misrepresentation related to AI is now considered a core enforcement priority, essential for protecting investors and maintaining the integrity of the market for genuine AI innovation.

RegTech and the Future of the Compliance Function

The same AI technology that is creating new compliance challenges is also providing the tools to meet them. AI is rapidly becoming an indispensable part of the modern compliance function's toolkit. It is being deployed for sophisticated trade surveillance, to identify anomalies in vast datasets that would be invisible to human reviewers, and to automate the time-consuming process of summarizing and analyzing lengthy regulatory documents. In a clear sign of this trend, compliance technology provider Smarsh recently unveiled a new OpenAPI platform that layers next-generation AI onto its electronic communications governance and compliance stack [39].

The adoption of this technology is becoming ubiquitous. A recent survey conducted by Kompliant and Equifax found that 99% of financial services organizations now rely on some form of compliance technology. Furthermore, 55% of firms are actively exploring or implementing AI and machine learning for compliance functions, making it the single most important emerging technology in the RegTech space [40].

However, both regulators and industry leaders are in firm agreement on one critical point: technology enhances, but does not replace, human accountability. The "human-in-the-loop" remains an essential safeguard. Human judgment is required to interpret the outputs of AI systems, to manage nuance and ambiguity, and to make the final, defensible decisions, particularly in high-risk situations. As AI-powered review becomes the norm, the role of the human compliance professional shifts from manual data processing to a higher-level function of oversight, validation, and strategic risk judgment.

Regulators are pursuing a clear dual-track strategy in their approach to AI, creating what can be described as a "sandbox-as-governance" model. On one hand, they are actively encouraging innovation within controlled environments, as exemplified by the FCA's "Supercharged Sandbox" initiative with NVIDIA [35]. On the other hand, they are preparing for and executing appropriate oversight based on observed behaviors rather than theoretical risks.

The FCA's sandbox represents a profound shift in regulatory thinking that many firms have yet to fully grasp. Rather than viewing sandboxes as temporary exceptions to rigid rules, forward-thinking organizations recognize them as the future of governance itself: perpetual learning laboratories where governance evolves alongside capability.

The Sandbox as Governance Model:

Continuous Experimentation: The sandbox isn't a phase before "real" governance; it IS governance for rapidly evolving technology

Regulatory Learning: Regulators learn what to regulate by observing actual behaviors, not imagining risks

Collaborative Discovery: Industry and regulators discover appropriate controls together through experience

Perpetual Beta: Accept that governance for agentic AI will never be "complete" but must continuously adapt

This transforms the traditional "sandbox-to-scrutiny pipeline" into a "sandbox-as-scrutiny" model. The most sophisticated firms will maintain perpetual sandbox capabilities, treating every deployment as an experiment that informs both business strategy and governance evolution. Those waiting for sandbox phases to end before deploying "real" governance have fundamentally misunderstood both the nature of the technology and the regulators' intent. Firms should see participation in regulatory sandboxes not merely as an R&D exercise, but as a crucial form of proactive regulatory engagement and continuous learning.

At the core of this new regulatory environment lies a fundamental tension between the "black box" nature of many advanced AI models and the non-negotiable regulatory demand for transparency and accountability. This is making "explainability" the new regulatory battleground. Multiple sources highlight the challenge posed by opaque AI models, where the path from input to output is not easily interpretable. The FCA's AI Update explicitly calls for a greater focus on the explainability of models, and compliance experts emphasize that human oversight is necessary to ensure that AI-driven decisions are both explainable and auditable.

The Explainability Dilemma: Two Perspectives

The Traditional View: Regulators and many industry leaders argue that explainability is essential. A financial firm must be able to defend critical decisions, such as loan denials or specific trading actions, with reasonable clarity. An opaque model that cannot support such a defense comes into direct conflict with core legal principles of due process and fiduciary duty. From this perspective, the ability to explain an AI model's decision-making process is rapidly shifting from a desirable technical feature to a de facto legal requirement.

An Alternative Perspective: However, demanding explainability might itself be problematic:

The Human Explainability Myth: Humans routinely make decisions they can't truly explain, then post-rationalize them. When a star trader makes a profitable trade based on "gut feel," we accept the outcome. Why hold AI to a higher standard?

Performance Sacrifice: Some of the most powerful AI approaches (deep neural networks in particular) are inherently less interpretable. Forcing explainability might mean accepting significantly worse performance, potentially harming the very stakeholders regulators seek to protect.

Explainability Theater: Simple explanations of complex decisions are often misleading. A reduced, "explainable" version of an AI's reasoning might give false confidence while missing crucial nuances.

Outcome-Based Accountability: Perhaps we should focus on whether AI systems produce fair, consistent, beneficial outcomes rather than whether we can understand their process. We could hold AI to statistical standards of fairness rather than procedural standards of explainability.
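To illustrate what a statistical standard of fairness could look like in practice, the sketch below compares approval rates across two groups of loan applicants. Everything in it is an assumption made for the example: the group labels, the decision data, the function names, and the 0.8 threshold, which is borrowed from the "four-fifths" rule of thumb used in U.S. employment-selection guidance and is not something the report or any financial regulator cited here prescribes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of approved decisions for each group."""
    approved: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no approvals in any group; ratio is undefined")
    return min(rates.values()) / highest


# Hypothetical outcomes: group A approved 80 of 100, group B approved 60 of 100.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 60 + [("B", False)] * 40
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Illustrative threshold only (assumption): flag the model when the ratio is below 0.8.
THRESHOLD = 0.8
passes = ratio >= THRESHOLD
print(f"rates={rates}, ratio={ratio:.2f}, passes={passes}")
```

A test of this kind evaluates outcomes without inspecting the model's internals, which is the trade-off this perspective proposes. It does not settle which fairness metric or threshold is appropriate; that remains a policy choice.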

This creates a new and complex optimization problem for data science and risk teams. While the regulatory pressure for explainability is real and must be addressed, firms should also question whether perfect explainability is achievable or even desirable. The future might require a more nuanced approach—using explainable models where legally required while preserving the option to deploy more powerful but less interpretable models where the performance gains justify it. Legal and compliance teams must work with data scientists not just to ensure explainability, but to help regulators evolve beyond explainability requirements where they may do more harm than good.

Strategic Synthesis and Forward-Looking Recommendations

The preceding analysis reveals a financial services industry in the throes of a profound, AI-driven transformation. The shift towards autonomous systems is reshaping market structures, competitive dynamics, workforce requirements, and the very nature of risk itself. Navigating this new landscape requires a clear-eyed understanding of the core strategic tensions at play and a willingness to take decisive, forward-looking action. This final section synthesizes the report's key findings into a cohesive strategic narrative and provides a set of actionable recommendations for industry leaders.

The Three Core Tensions Shaping the Future of Finance

The future of the financial services industry will be defined by how firms and regulators navigate three fundamental and interconnected tensions: