In a world where AI decisions must be justified to regulators, auditors, and customers, one class of algorithms provides both accuracy and the ability to explain why.

Summary

Everyone demands AI explanations, but most AI is black-box. Boosting techniques can explain each prediction they make.

Interpretability isn't optional anymore: regulators, customers, and boards increasingly require explanations for AI decisions.

Like medical specialists building diagnosis together, boosting algorithms layer expertise while explaining each step.

From 99% fraud-detection accuracy to competitive moats, interpretable AI transforms a compliance burden into a business advantage.

Gradient boosting delivers both performance and explanations. It's defining financial AI's future standard.

The Stakeholder Imperative

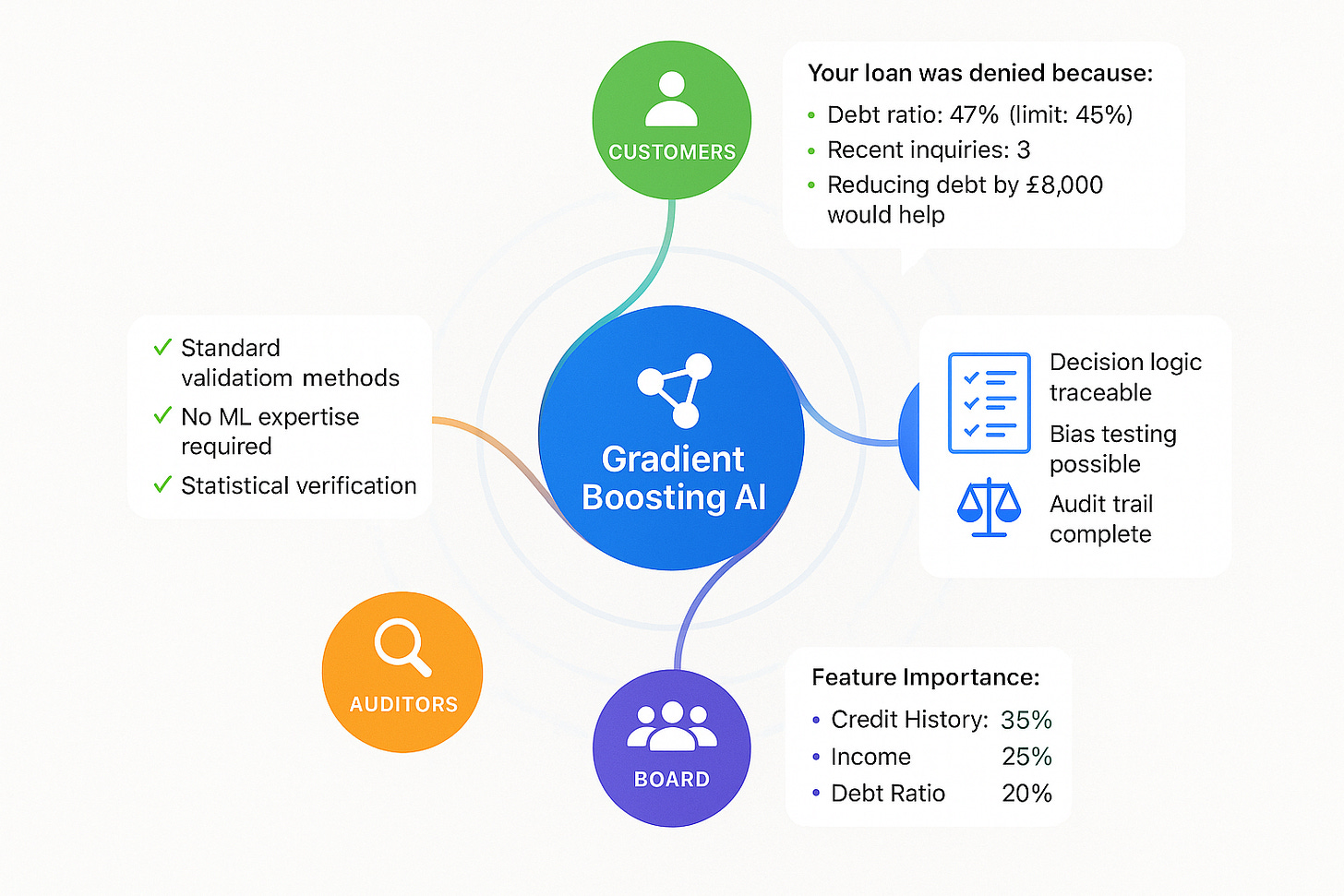

Board members want to understand AI risks. Customers demand explanations for adverse decisions. Regulators require algorithmic transparency. Auditors need traceable logic. The stakeholder pressure for explainable AI isn't coming—it's here.

Gradient boosting models account for 32% of all AI applications in UK financial services—more than any other model type, according to the Bank of England's 2024 survey [3]. This dominance isn't coincidental. While headlines focus on neural networks and generative AI, the algorithms quietly powering financial institutions share a crucial advantage: they can explain every decision they make.

This interpretability isn't a nice-to-have feature; it's becoming a business imperative. When AI systems determine loan approvals, flag suspicious transactions, or identify market anomalies, multiple stakeholders demand explanations. Many machine learning approaches force institutions to choose between accuracy and explainability. Gradient boosting largely removes this trade-off.

The competitive implications are profound. While competitors struggle to explain their AI decisions, institutions leveraging boosting's natural interpretability are transforming explanatory requirements from obstacles into advantages.

The Medical Specialist Analogy: Building Diagnosis Through Expertise

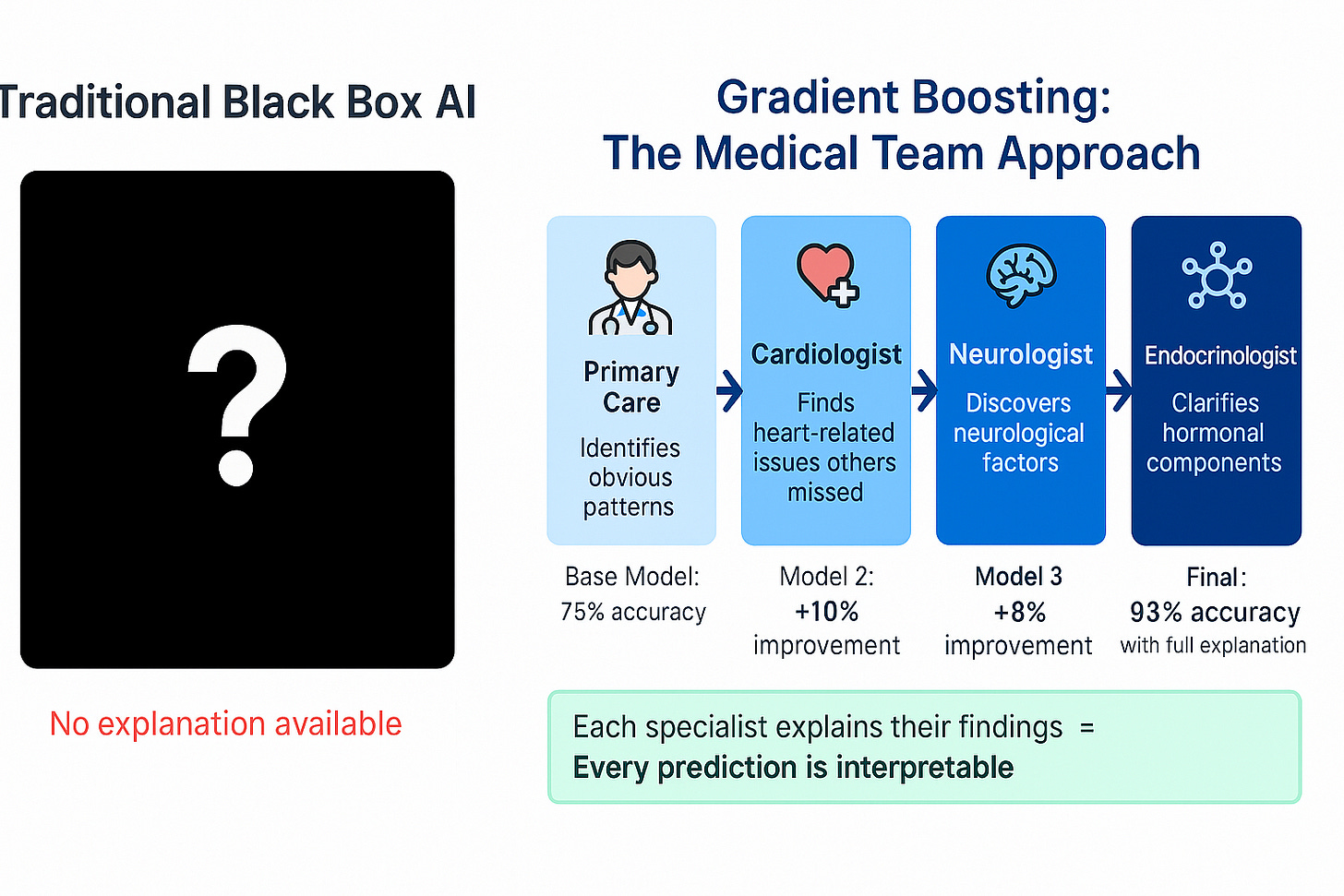

Imagine you're experiencing complex symptoms that have your primary care physician puzzled. Rather than guessing, she refers you to a cardiologist who identifies potential heart rhythm irregularities but admits the symptoms don't fully match typical cardiac patterns.

The cardiologist then consults with a neurologist, who focuses specifically on the symptoms the cardiac specialist couldn't explain. The neurologist discovers signs pointing to neurological involvement but recognizes that some symptoms still remain unexplained.

Next, an endocrinologist joins the case, concentrating precisely on what both previous specialists missed. Each doctor builds upon the others' findings, becoming increasingly specialized in addressing the gaps left by their predecessors.

Eventually, you have a team of specialists where each expert contributes a piece of the diagnostic puzzle. The cardiologist explains the cardiovascular aspects, the neurologist details the neurological components, and the endocrinologist clarifies the hormonal factors. Together, they provide a comprehensive diagnosis that any of them could explain to you, your family, or a medical review board.

This is exactly how gradient boosting algorithms work. Each "specialist" in the ensemble focuses on correcting the errors made by previous models, building an increasingly accurate and sophisticated decision-making system. But unlike a neural network black box, every component can explain its reasoning:

First Model: Identifies obvious patterns (like the primary care physician)

Second Model: Focuses specifically on the errors the first model left behind (its residuals)

Third Model: Specializes in errors still remaining after combining the first two

Continuing: Until the ensemble achieves desired accuracy

The result is a system where each prediction comes with a detailed explanation of contributing factors—crucial when regulators, auditors, or customers demand to understand AI decisions.
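
The stage-by-stage process described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than how XGBoost or LightGBM are implemented: it assumes squared-error loss, a single input feature, and one-split "stumps" as the specialists. The toy data and every function name are hypothetical.

```python
# Gradient boosting for squared error with one-feature decision stumps.
# Toy data and all names here are hypothetical, for illustration only.

def fit_stump(xs, residuals):
    """Pick the threshold split that best fits the current residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # keep both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosting(xs, ys, n_rounds, learning_rate=0.5):
    """Each round fits a new stump to what the ensemble still gets wrong."""
    base = sum(ys) / len(ys)  # stage 0: predict the average
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps


def stage_contributions(base, stumps, x, learning_rate=0.5):
    """The prediction for x, itemised as base value plus one term per stage."""
    return [base] + [learning_rate * stump_predict(s, x) for s in stumps]


def predict(base, stumps, x, learning_rate=0.5):
    return sum(stage_contributions(base, stumps, x, learning_rate))


def mse(xs, ys, base, stumps):
    return sum((y - predict(base, stumps, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 5.0]

for rounds in (0, 1, 5, 20):
    base, stumps = fit_boosting(xs, ys, n_rounds=rounds)
    print(rounds, "rounds -> training MSE", round(mse(xs, ys, base, stumps), 4))

base, stumps = fit_boosting(xs, ys, n_rounds=3)
print("itemised prediction for x=8:",
      [round(c, 3) for c in stage_contributions(base, stumps, 8)])
```

Because the final prediction is just a sum of stage outputs, it can always be itemised term by term, which is the property the explanation tooling builds on.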

The Natural Interpretability of Ensemble Learning

What makes boosting uniquely valuable for regulated financial services isn't just performance—it's the algorithm's inherent ability to explain its reasoning. This interpretability emerges naturally from the ensemble structure rather than being bolted on as an afterthought.

Consider SHAP (SHapley Additive exPlanations) values, now integrated directly into XGBoost and LightGBM packages [1]. These provide mathematically grounded explanations for individual predictions, showing how much each feature contributed to the final decision, with the contributions summing to the difference between the prediction and a baseline value. Unlike model-agnostic techniques that approximate a black box's reasoning from the outside, tree-based SHAP values are computed directly from the boosting model's tree structure.
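
To make the idea of per-feature contributions concrete, here is a small sketch that computes exact Shapley values by brute force. It is not the tree-specific algorithm the libraries use, and it scales exponentially with the number of features. The scoring function, feature names, applicant, and baseline are all hypothetical stand-ins for a trained model.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every feature coalition.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2^n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_risk_score(z):
    """Hypothetical tree-like scoring rule standing in for a trained model."""
    debt_to_income, recent_inquiries, years_employed = z
    score = 0.10
    if debt_to_income > 0.45:
        score += 0.30
        if recent_inquiries >= 3:  # only matters once the ratio is high
            score += 0.20
    if years_employed < 2:
        score += 0.15
    return score


feature_names = ["debt_to_income", "recent_inquiries", "years_employed"]
applicant = [0.47, 4, 5]
baseline = [0.30, 0, 5]  # hypothetical "typical" applicant

phi = shapley_values(toy_risk_score, applicant, baseline)
for name, contribution in zip(feature_names, phi):
    print(f"{name}: {contribution:+.3f}")
print("baseline score:", toy_risk_score(baseline))
print("applicant score:", toy_risk_score(applicant))
```

The contributions add up to the gap between the applicant's score and the baseline score, and the interaction between the two risk factors is shared between them rather than assigned to either one alone.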

A recent study on credit risk assessment using LightGBM achieved 87% accuracy while providing clear decision rationales through LIME and SHAP explanations [2]. Each loan decision could be traced to specific factors—debt-to-equity ratios, cash flow patterns, employment history—with quantified importance scores. This transparency isn't a compliance checkbox; it's a fundamental feature of how the algorithm works.

This built-in transparency helps explain why gradient boosting leads the model types reported in the Bank of England's 2024 survey [3]. The reason isn't regulatory virtue; it's practical necessity. When your AI decisions affect customer outcomes, regulatory standing, and business liability, explainability isn't optional.

Performance With Explanations: Real-World Evidence

The interpretability advantage of boosting becomes compelling when combined with superior performance across financial applications. Recent implementations demonstrate that institutions don't have to choose between accuracy and explainability:

Fraud Detection: A stacking ensemble combining XGBoost, LightGBM, and CatBoost achieved 99% accuracy in credit card fraud detection while maintaining full explainability through SHAP and LIME techniques [4]. The system processes transactions in real-time while providing clear explanations for each fraud alert. When a customer disputes a blocked transaction, customer service representatives can immediately explain the specific factors that triggered the fraud detection—unusual merchant categories, atypical spending amounts, or suspicious geographic patterns.

Imagine you are the Chief Risk Officer at a regional bank, reviewing the monthly model performance report. Your fraud detection system has achieved 99% accuracy with zero false-positive complaints escalated to senior management. When board members ask how the system works, you can walk them through actual decisions: "This transaction was flagged because it combined several unusual patterns: a £2,000 purchase at 3 AM from a merchant category the customer had never used before, in a geographic location 200 miles from their normal spending area, using a payment method they rarely use. Each factor individually might be acceptable, but the combination triggered our alert."

This level of detail transforms risk management from reactive compliance to proactive business intelligence.

Credit Risk Assessment: Financial institutions using optimized XGBoost for credit scoring report 79.5% accuracy with comprehensive explanations for every lending decision [5]. Each credit assessment includes detailed analysis of contributing factors, their relative importance, and confidence intervals. When customers are denied loans, institutions can provide specific, actionable feedback rather than generic rejections.

Anti-Money Laundering: The Bank of Canada implemented a two-stage LightGBM framework for anomaly detection in their payment system [6]. The gradient boosting classifier outperformed traditional logistic regression by 44% while correctly detecting 93% of artificially manipulated transactions. Crucially, each alert comes with detailed explanations of suspicious patterns—unusual timing, atypical amounts, irregular counterparties—enabling efficient investigation and regulatory reporting.

As we explored in our previous analysis of institutional adoption [7], these performance improvements aren't marginal—they represent fundamental advantages that compound over time into sustainable competitive moats.

The Stakeholder Communication Advantage

Beyond regulatory compliance, boosting's interpretability creates advantages across multiple stakeholder relationships that traditional AI approaches cannot match:

Customer Trust: When a mortgage application is declined, customers deserve explanations beyond "the computer said no." Boosting algorithms can provide specific, actionable feedback: "Your debt-to-income ratio of 47% exceeded our 45% threshold, and recent credit inquiries raised additional concerns. Reducing debt by £8,000 or increasing income by £15,000 would likely change this outcome."

Board Oversight: Directors increasingly demand to understand AI systems affecting business risk. Boosting models provide intuitive explanations that non-technical board members can grasp. Feature importance charts show which factors drive decisions. Decision trees illustrate the logical flow. Model performance can be validated through interpretable metrics.

Audit Defense: External auditors examining AI-driven processes can trace decision logic through transparent algorithms. Unlike neural networks requiring specialized expertise to audit, boosting models can be validated using standard statistical techniques. Auditors can sample decisions, examine feature contributions, and verify model logic without becoming machine learning experts.

Regulatory Relations: Building trust with regulators requires demonstrating that AI systems operate predictably and fairly. Boosting algorithms enable this through consistent explainability. Regulators can examine model documentation, validate decision processes, and understand how algorithms respond to different scenarios.
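
The actionable feedback in the Customer Trust example rests on simple arithmetic, sketched below. It assumes the ratio is total debt divided by annual income and that a single threshold decides the outcome; the figures are hypothetical and are not meant to reproduce the amounts in the quoted message. A real boosted model combines many splits, so any suggested change should be confirmed by re-scoring the modified application.

```python
def changes_to_meet_threshold(total_debt, annual_income, threshold):
    """Smallest single change that brings debt / income down to the threshold."""
    ratio = total_debt / annual_income
    if ratio <= threshold:
        return {"ratio": ratio, "reduce_debt_by": 0.0, "increase_income_by": 0.0}
    return {
        "ratio": ratio,
        # Solve (debt - x) / income = threshold for x.
        "reduce_debt_by": total_debt - threshold * annual_income,
        # Solve debt / (income + y) = threshold for y.
        "increase_income_by": total_debt / threshold - annual_income,
    }


# Hypothetical applicant with a 47% ratio against a 45% threshold.
result = changes_to_meet_threshold(
    total_debt=23_500, annual_income=50_000, threshold=0.45
)
print(f"current ratio: {result['ratio']:.0%}")
print(f"reduce debt by: {result['reduce_debt_by']:,.2f}")
print(f"or increase income by: {result['increase_income_by']:,.2f}")
```

Note that the income change needed is larger than the debt change, because income sits in the denominator of the ratio.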

Implementation Strategy: Building Interpretable AI Systems

For financial institutions seeking to leverage boosting's interpretability advantages, several strategic considerations determine success:

Documentation Architecture: Every boosting model requires comprehensive documentation that non-technical stakeholders can understand. This includes feature descriptions, importance rankings, performance metrics, and decision examples. Unlike black-box models where documentation is largely theoretical, boosting enables concrete explanations of algorithmic behavior.

Validation Frameworks: Interpretable AI enables more robust validation processes. Model validators can examine feature importance, understand decision boundaries, and test edge cases systematically. Changes in model behavior become immediately visible through shifting importance scores and decision tree structures.

Stakeholder Training: Teams need to understand how to interpret and communicate boosting model outputs. This includes compliance officers explaining decisions to regulators, customer service representatives discussing AI outcomes with clients, and risk managers validating model behavior with audit committees.

Monitoring Systems: The interpretability of boosting models enables sophisticated monitoring that detects model drift, bias emergence, and performance degradation. Feature importance shifts signal when models need retraining. Decision pattern changes indicate when business conditions have evolved beyond model assumptions.
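
As a rough sketch of the monitoring idea above, the snippet below compares each feature's share of total absolute contribution between a reference window and a current window, and flags features whose share moved by more than a tolerance. The per-decision contribution values, the feature names, and the 0.10 tolerance are all hypothetical; in practice the contributions would come from the model's own explanation output.

```python
def importance_shares(contribution_rows):
    """Mean absolute contribution per feature, normalised to sum to 1."""
    features = list(contribution_rows[0].keys())
    totals = {f: 0.0 for f in features}
    for row in contribution_rows:
        for f in features:
            totals[f] += abs(row[f])
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {f: 0.0 for f in features}
    return {f: totals[f] / grand_total for f in features}


def importance_shift(reference_rows, current_rows, tolerance=0.10):
    """Features whose importance share changed by more than the tolerance."""
    ref = importance_shares(reference_rows)
    cur = importance_shares(current_rows)
    return {
        f: round(cur[f] - ref[f], 4)
        for f in ref
        if abs(cur[f] - ref[f]) > tolerance
    }


# Hypothetical per-decision feature contributions from two time windows.
reference_window = [
    {"amount": 0.4, "merchant_category": 0.1, "distance": 0.1},
    {"amount": -0.2, "merchant_category": 0.2, "distance": 0.0},
]
current_window = [
    {"amount": 0.1, "merchant_category": 0.2, "distance": 0.3},
    {"amount": -0.1, "merchant_category": 0.1, "distance": 0.2},
]

flagged = importance_shift(reference_window, current_window)
print("features with shifted importance:", flagged)
```

A flagged feature is a prompt for investigation, not proof of drift: the shift may reflect a genuine change in customer behaviour rather than a degraded model.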

The Competitive Moat of Explanation

As we discussed in our analysis of boosting's transformation of capital markets [8], the competitive advantages extend beyond individual applications to systemic institutional capabilities. Organizations that can deploy accurate AI while explaining every decision gain multiple advantages:

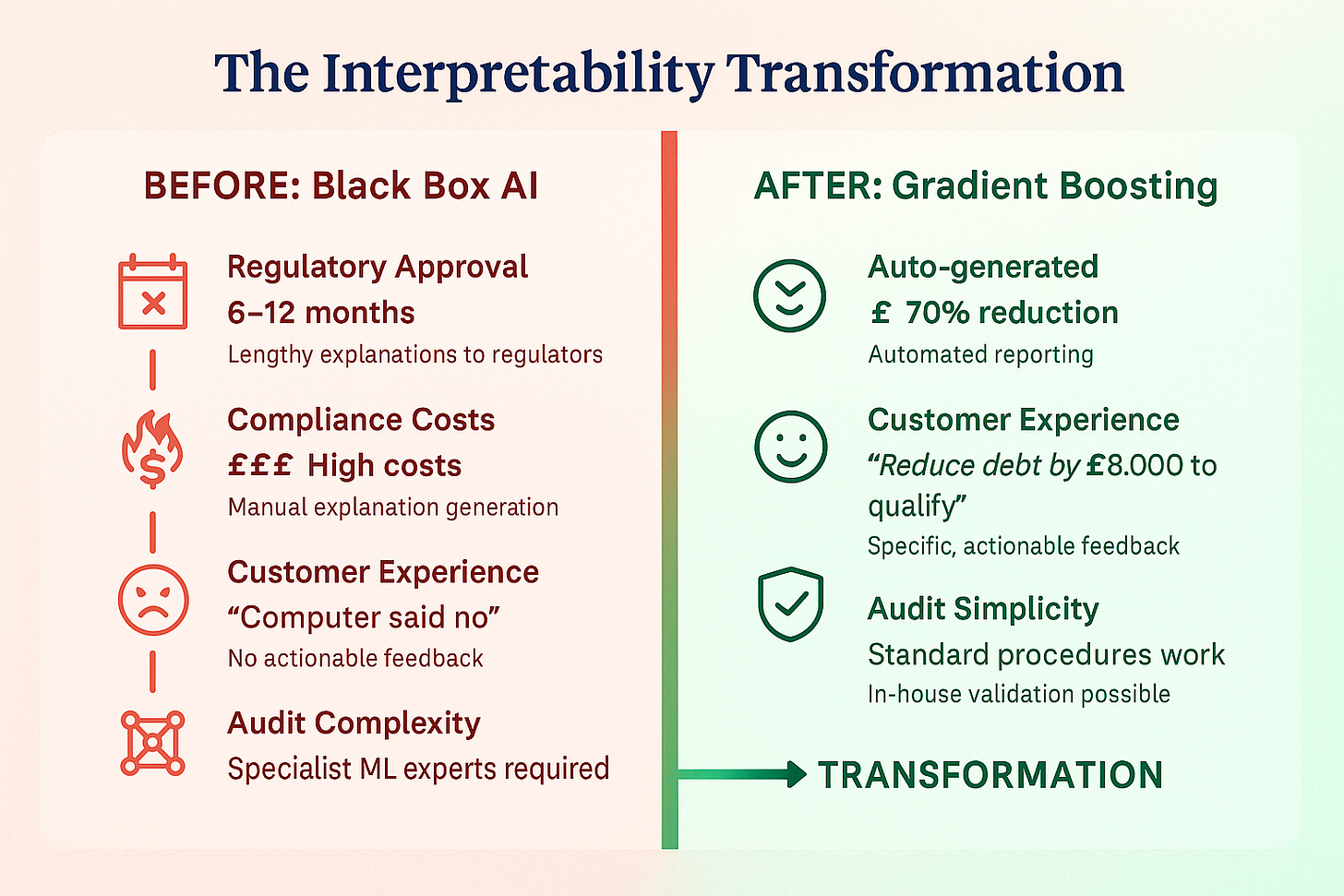

Faster Innovation Cycles: When regulators understand your AI systems, approval processes accelerate. New applications can be deployed more quickly when decision logic is transparent and can be validated.

Reduced Compliance Costs: Interpretable AI reduces the resources required for regulatory reporting, audit preparation, and investigation responses. Automated explanation generation eliminates manual analysis for routine inquiries.

Enhanced Risk Management: Understanding why AI systems make specific decisions enables better risk assessment and control. Model behavior can be validated against business logic. Edge cases can be identified and addressed proactively.

Customer Satisfaction: Transparent AI decisions build customer trust and reduce complaints. When outcomes can be explained clearly, customers are more likely to accept adverse decisions and more confident in favorable ones.

Technical Implementation: Making Boosting Work

The practical deployment of interpretable boosting systems requires attention to both algorithmic performance and explanation quality:

Feature Engineering: Boosting algorithms excel with well-engineered features that have business meaning. Unlike neural networks that can work with raw data, boosting benefits from features that domain experts can understand and validate.

Hyperparameter Tuning: Modern boosting implementations such as XGBoost and LightGBM can achieve microsecond-scale inference latencies when properly optimized; the cited benchmark reports ultra-low latency for XGBoost running on Xelera Silva [9]. This enables real-time applications while maintaining full explainability, a combination that is difficult for large neural networks to match at comparable speeds.

Explanation Integration: SHAP values and feature importance scores should be integrated into business workflows, not treated as separate analytical outputs. Customer-facing systems need automated explanation generation. Compliance systems require explanation archiving and retrieval.

Model Governance: Interpretable AI enables stronger governance frameworks. Model changes can be validated through explanation consistency. Business rules can be encoded as constraints on feature importance. Model behavior can be audited through systematic explanation analysis.
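
The Explanation Integration point above can be illustrated with a short sketch that turns per-feature contributions into ranked reason codes, a customer-facing message, and a JSON record suitable for archiving. The feature names, reason wording, record fields, and contribution values are hypothetical; a production system would take the contributions from the model's SHAP output and follow the institution's own record schema.

```python
import json
from datetime import datetime, timezone

# Hypothetical mapping from model feature names to plain-language reasons.
REASON_TEXT = {
    "merchant_category_new": "merchant category the customer has not used before",
    "distance_from_home_miles": "purchase location far from usual spending area",
    "hour_of_day": "unusual time of day",
    "payment_method_rare": "rarely used payment method",
    "account_age_years": "length of account history",
}


def build_explanation_record(decision_id, score, contributions, top_n=3):
    """Rank features by absolute contribution and keep the top reasons."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [
        {
            "feature": name,
            "contribution": value,
            "effect": "raised score" if value > 0 else "lowered score",
            "text": REASON_TEXT.get(name, name),
        }
        for name, value in ranked[:top_n]
    ]
    return {
        "decision_id": decision_id,
        "score": score,
        "reasons": reasons,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def render_message(record):
    """Plain-text summary a service representative could read out."""
    lines = [
        f"Decision {record['decision_id']} (score {record['score']:.2f}). Main factors:"
    ]
    for i, reason in enumerate(record["reasons"], start=1):
        lines.append(f"{i}. {reason['text']} ({reason['effect']})")
    return "\n".join(lines)


# Hypothetical contributions for one flagged transaction.
contributions = {
    "merchant_category_new": 0.31,
    "hour_of_day": 0.22,
    "distance_from_home_miles": 0.27,
    "payment_method_rare": 0.08,
    "account_age_years": -0.05,
}

record = build_explanation_record("txn-0001", 0.91, contributions)
archived = json.dumps(record)  # what an explanation archive would store
print(render_message(json.loads(archived)))
```

Storing the full record rather than only the rendered message keeps the numeric contributions available for later audit queries.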

Looking Forward: The Interpretability Imperative

The demand for explainable AI in financial services will only intensify. Customer expectations for transparency are rising. Regulatory scrutiny of algorithmic decisions is increasing. Board oversight of AI systems is becoming standard practice.

In this environment, boosting algorithms provide sustainable competitive advantages. They deliver the performance financial institutions need while enabling the explanations that stakeholders demand. Early adopters are already seeing benefits: faster regulatory approval, stronger audit performance, enhanced customer trust, and operational efficiency.

The choice facing financial institutions isn't whether to adopt interpretable AI—market forces are making that decision inevitable. The choice is which approaches can deliver both performance and explainability in an increasingly demanding environment.

For institutions serious about AI-driven transformation while maintaining stakeholder trust, boosting offers a proven path forward. The algorithms are mature, the infrastructure is available, and the interpretability advantages are fundamental to the approach.

Conclusion

As financial markets demand both AI sophistication and decision transparency, gradient boosting algorithms have emerged as the optimal solution for institutions that cannot afford to choose between performance and explainability.

The evidence is compelling. From 99% fraud detection accuracy with full explanations to 44% improvements in anomaly detection with reduced compliance workload, boosting delivers measurable advantages across critical financial applications. More importantly, these benefits compound over time into sustainable competitive moats that black-box approaches cannot replicate.

The interpretability revolution in financial AI isn't coming—it's already here. Gradient boosting isn't just participating—it's defining the standard for what explainable high-performance AI looks like in practice.

If you found this valuable, forward it to a colleague who's navigating AI transformation. They'll thank you for the clarity amidst the hype.

This analysis builds on extensive case study research and performance data from financial institutions worldwide. The interpretability advantages discussed represent fundamental algorithmic properties rather than temporary competitive benefits.

References

[1] Interpretable Machine Learning with XGBoost https://medium.com/data-science/interpretable-machine-learning-with-xgboost-9ec80d148d27

[2] Explainable AI based LightGBM prediction model to predict default borrower in social lending platform https://www.sciencedirect.com/science/article/pii/S2667305325000407

[3] Bank of England – Artificial intelligence in UK financial services (2024) https://www.bankofengland.co.uk/report/2024/artificial-intelligence-in-uk-financial-services-2024

[4] Financial Fraud Detection Using Explainable AI and Stacking Ensemble Methods https://arxiv.org/html/2505.10050v1

[5] A Comparative Performance Assessment of Ensemble Learning for Credit Scoring https://www.researchgate.net/publication/346201975_A_Comparative_Performance_Assessment_of_Ensemble_Learning_for_Credit_Scoring

[6] Bank of Canada – Finding a needle in a haystack: A Machine Learning Framework for Anomaly Detection in Payment Systems https://www.bankofcanada.ca/wp-content/uploads/2024/05/swp2024-15.pdf

[7] Building Better Predictions: How Boosting Is Quietly Transforming Capital Markets https://lucidate.substack.com/

[8] The Quiet Revolution: How Machine Learning Boosting is Reshaping Capital Markets https://lucidate.substack.com/

[9] Ultra‑low Latency XGBoost with Xelera Silva https://www.xelera.io/post/ultra-low-latency-xgboost-with-xelera-silva